Most teams discover their LLM is leaking sensitive context during a rushed proof of concept, not from a formal red team exercise. Working across different tech companies, I have seen the same failure modes repeat: prompts that include raw PII or secrets, RAG pipelines that ignore document access controls, and output streams that re-expose masked or inferred data.

The risk is no longer theoretical. IBM reported the global average cost of a breach at 4.88 million dollars in 2024, falling to 4.44 million dollars in 2025 as detection improved. Entering 2026, breach costs in the United States remain the highest globally at just over 10 million dollars per incident, driven by regulatory penalties, litigation, and supply chain exposure. Boards now expect AI controls that map directly to OWASP’s LLM Top 10, which explicitly calls out Sensitive Information Disclosure and Prompt Injection as top risks.

From my experience in the startup ecosystem, the fastest wins in 2026 come from LLM native data loss prevention that masks data in flight, enforces permissions at retrieval time, and scrubs outputs according to policy. Point solutions and regex based filters are no longer enough once GenAI moves beyond demos and into production workflows.

Verax Protect

Real‑time, LLM‑native DLP that runs inside your private environment and filters sensitive data on the way in and out of LLMs. Focused on contextual analysis so only the sensitive fragments are removed, preserving utility.

- Best for: Enterprises that want a self‑hosted, in‑network LLM DLP layer that evaluates prompts and outputs in real time.

- Key Features:

- LLM‑native DLP for complex prompts and outputs, with contextual analysis, per vendor documentation.

- Bi‑directional controls that block leaks into third‑party LLMs and oversharing back to users, per vendor documentation.

- Deployable in a private cloud or VPC so traffic stays inside your boundary, per vendor documentation.

- Why we like it: The design is opinionated for production guardrails, not just regex, and keeps non‑sensitive text intact for accuracy. That matters when security tools otherwise over‑redact and break answers.

- Notable Limitations:

- Young product with limited third‑party reviews; most references are announcement coverage, such as VMblog on the launch and feature claims (VMblog and EdTech Innovation Hub).

- Pricing and performance benchmarks are not public, so buyers will need a proof‑of‑value.

- Pricing: Pricing not publicly available. Contact Verax for a custom quote. Third‑party launch coverage does not list pricing.

Protecto (LLM Data Privacy & Security DLP)

AI‑powered DLP that masks PII and sensitive data inside LLM chats while keeping prompts usable, including in Databricks‑centric GenAI apps. Designed to preserve comprehension even when text is masked.

- Best for: Privacy, compliance, and platform teams that need prompt and response masking without breaking user workflows.

- Key Features:

- Intelligent masking of PII and PHI in prompts and outputs so models still comprehend masked text, per vendor documentation.

- APIs to apply guardrails to user prompts, context data, and LLM responses, with recent announcements focused on Databricks workloads (PR Newswire).

- Visibility and analytics on AI conversations to trace data flows, per vendor documentation.

- Why we like it: The emphasis on masking rather than blanket blocking reduces user friction and keeps accuracy intact for support and analytics teams.

- Notable Limitations:

- Limited volume of independent reviews compared with legacy DLPs; G2 has minimal public feedback for the vendor profile (G2 seller profile).

- No public performance benchmarks reviewed by analysts at the time of writing, so expect a bake‑off.

- Pricing: Pricing not publicly available. Contact Protecto for a custom quote. The vendor markets a free trial, but no third‑party pricing is listed.

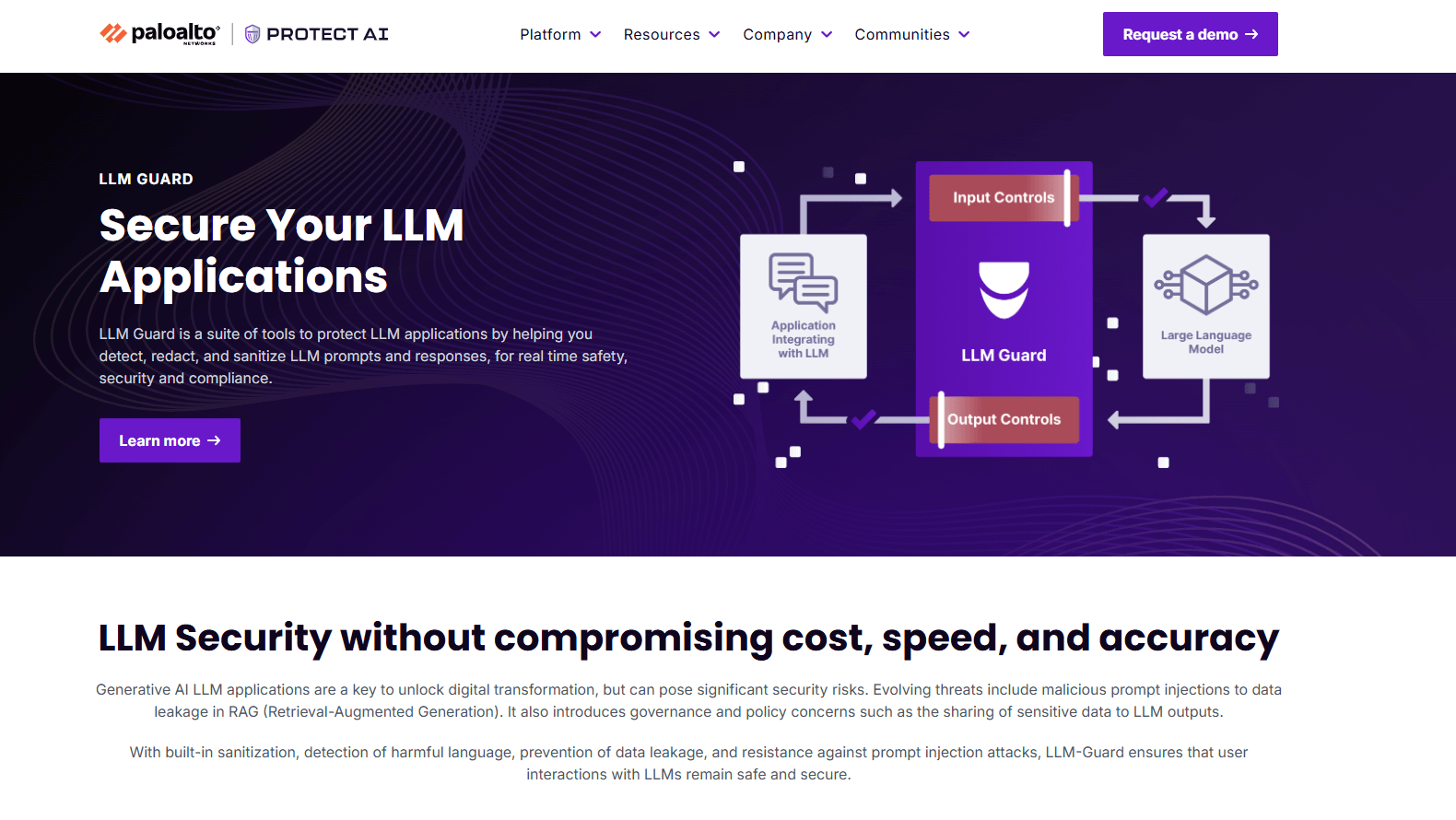

LLM Guard

Open‑source toolkit from Protect AI that detects, sanitizes, and redacts sensitive content in prompts and responses, and screens for prompt‑injection patterns. Broad community adoption following an acquisition.

- Best for: Teams that want a no‑license, code‑level guardrail to plug into existing LLM stacks, with the option to buy enterprise features later.

- Key Features:

- Input and output scanners for data leakage, harmful content, and prompt injection, confirmed by independent coverage (CSO Online).

- Model‑agnostic integration as a Python package and API, validated by open‑source usage and media coverage (GeekWire acquisition note).

- Commercial pathway inside Protect AI's platform for enterprise policy and management, per news coverage (TechCrunch funding context).

- Why we like it: Fast way to add practical defenses in code with strong community signals, plus a path to centralized controls if you standardize on Protect AI.

- Notable Limitations:

- Open source means you own tuning and operations; enterprises may still want centralized policy and audit, which arrive through Protect AI's commercial stack.

- Like most detection defenses, false positives and latency need tuning for your data and prompts, a tradeoff discussed in independent evaluations of LLM safety tooling (arXiv benchmark).

- Pricing: Open source, no license fee as confirmed in independent coverage of the project and acquisition. Commercial add‑ons are quote‑based.

Lakera Guard

Real‑time screening of LLM inputs and outputs for PII, prompt attacks, and system prompt exposure, with policy guardrails and very low latency. Available as SaaS or self‑hosted for enterprises.

- Best for: Regulated organizations that need managed guardrails, centralized policies, and optional self‑hosting.

- Key Features:

- Screening for PII and sensitive entities with the ability to mask or block, per vendor documentation.

- Daily‑updated threat intelligence and prompt‑attack defenses fed by the Gandalf red‑teaming community, per third‑party coverage of Lakera's research programs (Nasdaq press release).

- SaaS and self‑hosted deployment, with enterprise licensing required for self‑hosting as described in docs, which aligns with common enterprise rollouts.

- Why we like it: Strong focus on production latency and policy, plus self‑hosting for sensitive workloads.

- Notable Limitations:

- Acquisition by Check Point closed in October 2025, which could change packaging and buying motion (CRN, GlobeNewswire).

- Limited independent user reviews so far; G2 shows very few data points (G2 product page).

- Pricing: Community access reported by third‑party profiles, with enterprise pricing not publicly disclosed (F6S listing). For self‑hosting you will need an enterprise license, per documentation norms.

LLM Data Leakage Prevention Tools Comparison

| Tool | Best For | Pricing Model | Highlights |

|---|---|---|---|

| Verax Protect | Private, in‑network LLM DLP | Custom quote | Context‑aware, bi‑directional masking and blocking |

| Protecto | Prompt and chat masking | Custom quote (trial available) | Maintains usability while masking PII |

| LLM Guard | Code‑level guardrails | Open source + commercial | Input/output scanners for leakage and injection |

| Lakera Guard | Managed guardrails | Custom quote (community access) | Low latency screening, now part of Check Point |

LLM Data Leakage Prevention Platform Features

| Tool | PII Masking | Prompt Injection Defense | Output Scrubbing |

|---|---|---|---|

| Verax Protect | Yes, contextual | Yes | Yes |

| Protecto | Yes, preserves comprehension | Yes, policy guardrails | Yes |

| LLM Guard | Yes, via scanners | Yes | Yes |

| Lakera Guard | Yes | Yes, Gandalf threat intel | Yes |

LLM Data Leakage Prevention Deployment Options

| Tool | Cloud API | On‑Premise | Air‑Gapped | Integration |

|---|---|---|---|---|

| Verax Protect | Yes | Yes (VPC) | Possible | Moderate |

| Protecto | Yes | Not documented | Not documented | Low to moderate |

| LLM Guard | Library/API | Yes | Yes | Low for library |

| Lakera Guard | Yes | Yes (enterprise) | Possible | Low for API |

LLM Data Leakage Prevention Strategic Framework

| Critical Question | Why It Matters | What to Evaluate | Red Flags |

|---|---|---|---|

| In‑network or SaaS? | Regulated data may require VPC/on‑prem | Self‑host options, data residency | Only SaaS, no transparency |

| Over‑redaction risk? | Excess redaction kills accuracy | Contextual masking, field policies | Regex‑only rules |

| Prompt injection defense? | OWASP lists as LLM01 | Input scanners, URL tests | No scanning for retrieved content |

| Prove ROI and compliance? | Breaches are expensive | Blocked events, policy hits, logs | No dashboards or reports |

LLM Data Leakage Prevention Pricing Overview

| Organization Size | Recommended Setup | Notes |

|---|---|---|

| Startup, pre‑compliance | LLM Guard library + pilot masking | Open source, pricing varies for managed |

| Mid‑market, light PHI/PII | Managed guardrails pilot | Not publicly disclosed |

| Enterprise, regulated | Self‑hosted Verax/Lakera | Custom quote |

Problems & Solutions

-

Problem: Employees paste sensitive data into public LLMs. Breach costs rose 10 percent to $4.88M and 70 percent of organizations reported significant disruption, which raises the stakes for input controls (IBM 2024 report).

- Verax Protect: Runs in your network and removes sensitive fragments in flight while keeping the rest of the prompt intact, per vendor documentation.

- Protecto: Masks PII or PHI in prompts so models still understand the text, a capability highlighted in its Databricks announcement.

- LLM Guard: Add input scanners to sanitize prompts at the library level for code‑first teams.

- Lakera Guard: Enforce PII policies on inputs with low latency and block masked data from leaving the boundary, consistent with its positioning in enterprise press coverage.

-

Problem: RAG overshares restricted documents because ACLs were not applied at retrieval time, a scenario aligned with OWASP risks around vector and embedding weaknesses and sensitive information disclosure.

- Verax Protect: Context‑aware analysis checks whether the assembled context violates policy before it reaches the model, per vendor documentation.

- Protecto: Applies guardrails to context data and model responses inside data platforms like Databricks, based on public launch details.

- LLM Guard: Output scanners redact sensitive strings and secrets from model responses before presentation.

- Lakera Guard: Centralized policies screen both inputs and outputs, with enterprise deployment patterns referenced in press coverage.

-

Problem: Prompt injection through the browser. New research shows a "man in the prompt" browser‑extension attack can read or write prompts and exfiltrate data in popular tools (Dark Reading).

- Verax Protect: In‑line filtering blocks suspicious instructions and data exfiltration attempts in transit, per vendor documentation.

- Protecto: Policy guardrails on prompts and responses reduce the chance of exfiltrating PII even if an extension tampers with the DOM, per vendor documentation.

- LLM Guard: Prompt‑injection detectors catch many instruction‑stuffing patterns before the backend receives them.

- Lakera Guard: Daily threat intelligence and low‑latency screening help catch evolving prompt‑attack techniques, as highlighted in third‑party coverage of Lakera's research inputs.

-

Problem: Auditors ask to map guardrails to a standard. NIST released a Generative AI profile of the AI Risk Management Framework to guide controls and governance (NIST).

- All four tools: Produce block logs and policy hits that you can align to AI RMF and to OWASP's LLM Top 10. If your regulator is DFS‑NY, this also supports AI‑specific cyber guidance on governance and controls for covered entities (Reuters summary of DFS guidance).

Note on independent evaluations: A recent academic comparison of LLM safety tools reported that Lakera Guard and Protect AI's LLM Guard performed well overall in its tests, while also highlighting tradeoffs and the need for context‑aware detections (arXiv benchmark).

What to pick if you need results this quarter

- You want free, fast protection in code: start with LLM Guard for input and output scanners, then add policy as needs mature.

- You want masking that keeps prompts usable: test Protecto in a pilot where support or ops teams rely on readable prompts while masking PII.

- You need private‑network enforcement and contextual policies: shortlist Verax Protect or a self‑hosted Lakera Guard. For Lakera, note that Check Point completed its acquisition in October 2025 and is integrating Lakera into the Infinity platform.

Final Take

By 2026, LLM data leakage is a governance and engineering problem, not just a prompt hygiene issue. Teams that rely on ad hoc redaction or post hoc monitoring continue to leak sensitive context during retrieval, inference, or response generation. Teams that deploy in-line controls see fewer incidents, faster approvals, and smoother security reviews.

If you need immediate protection inside application code, LLM Guard remains the fastest way to block common leakage and prompt injection patterns. If preserving prompt readability while masking PII is critical for support or analytics teams, Protecto is a strong option. If your priority is enforcing policy inside your own network with contextual awareness, Verax Protect or a self-hosted Lakera Guard fit regulated environments. For Lakera specifically, its integration into a larger security platform following the 2025 acquisition positions it well for enterprises standardizing AI security in 2026, though packaging and pricing may evolve.

With breach costs still measured in millions, regulatory scrutiny increasing, and AI systems handling more sensitive data every quarter, LLM native guardrails are no longer optional. The right choice in 2026 is the one that fits your deployment model, latency budget, and compliance obligations, and that prevents leaks before users, auditors, or attackers find them first.