For daily SEO audits, fast error detection, systematic site analysis, and website scraping, NetSpeak Spider is a desktop web crawler that has you covered.

This tool helps with on-page optimization analysis: checking status codes, crawling and indexing instructions, website structure, and redirects. Filters such as date range, device type, and segment let you fine-tune the analysis of your online store and its individual product pages, along with the metrics you use to measure traffic, conversions, and goals.

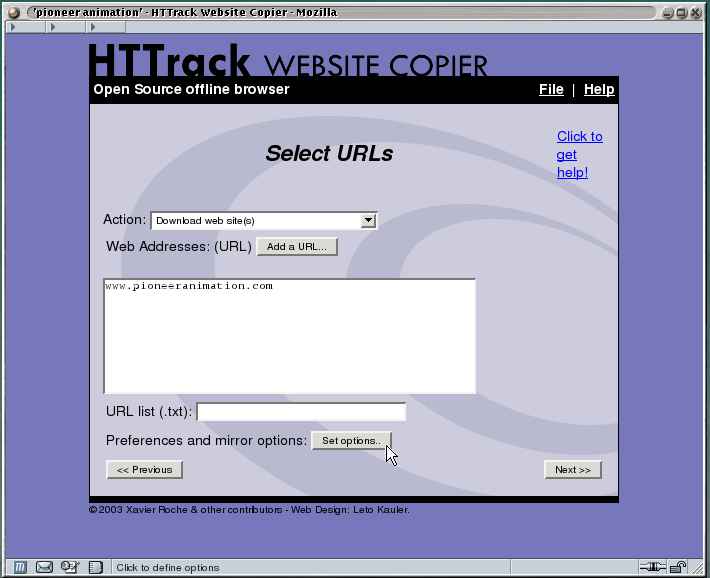

Plenty of decent tools offer the same range of services as NetSpeak Spider, and picking the best of the lot can get confusing. Luckily, we've got you covered with our curated list of alternative tools to suit your unique work needs, complete with features and pricing.