Most teams discover AI risk during an incident review, not from a tidy audit dashboard. Working across different tech companies, I continue to see the same pattern intensify into 2026: shadow AI shows up in logs, GenAI prompts expose sensitive context, and no one can reliably prove who actually had need to know. As AI adoption accelerates, the gap between experimentation and governance is now the primary source of risk.

From my experience in the startup ecosystem, the fastest wins in 2026 still come from three technical moves. Prompt layer authorization that enforces access at answer time, continuous monitoring for model, data, and usage drift, and runtime guardrails that redact or block risky outputs before they reach users. These controls matter because breach impact remains material. IBM reported the average breach at $4.88M in 2024, and while global averages softened slightly afterward, U.S. breach costs remain among the highest worldwide. IBM’s more recent analysis also shows AI related incidents increasingly tied to shadow AI and weak access controls, reinforcing that AI risk is now a governance problem, not just a security one.

Here are five AI TRiSM platforms that consistently cover discovery, policy, runtime controls, and compliance. You will learn which tools are strongest at shadow AI detection, which provide audit ready prompt and model logging, and which deliver runtime defenses aligned to OWASP’s LLM Top 10. Gartner continues to flag AI TRiSM as a top strategic priority, projecting that by 2026 organizations applying AI TRiSM controls materially improve decision quality by reducing faulty or illegitimate outputs.

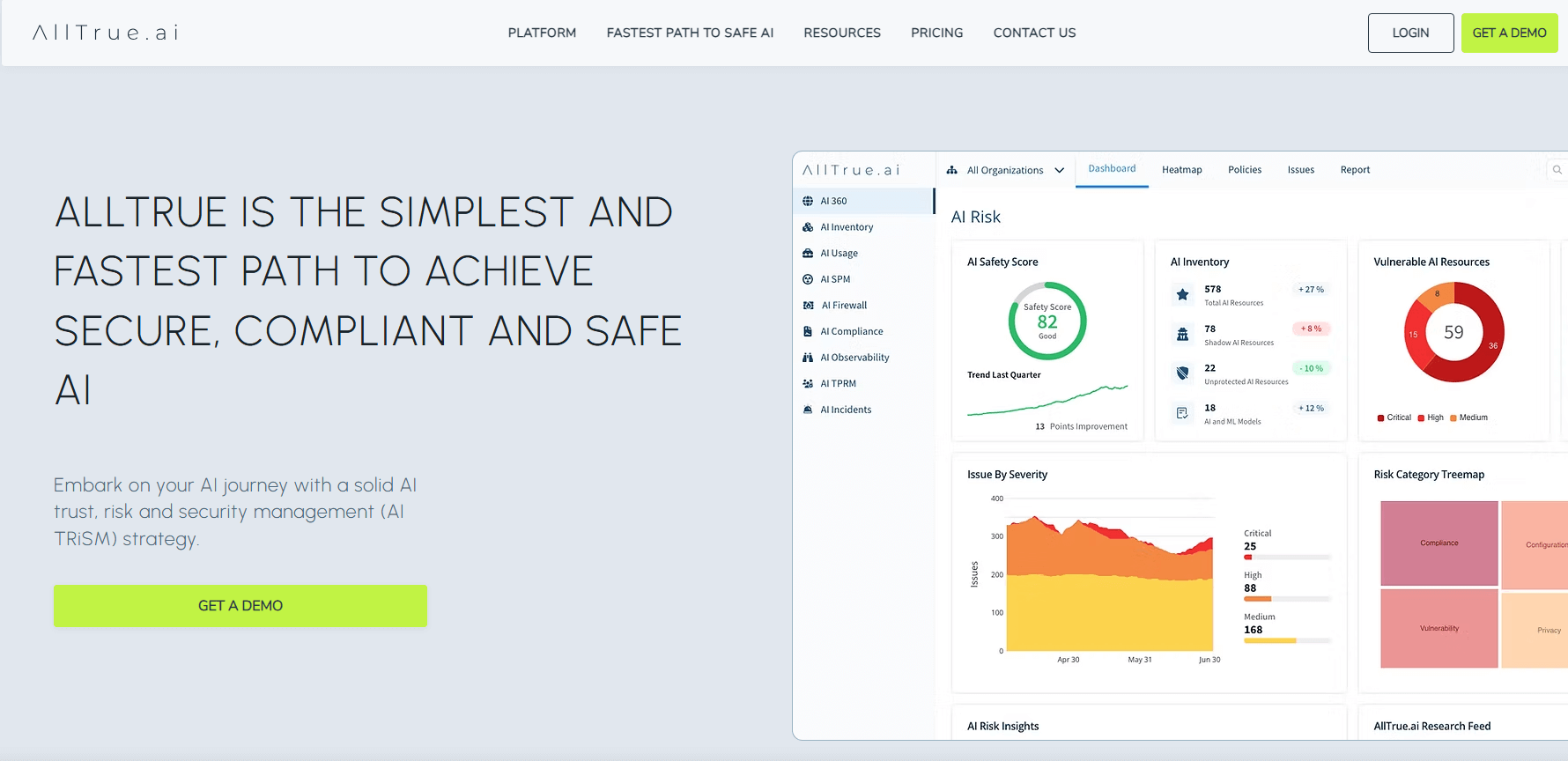

AllTrue.ai TRiSM Hub

A unified AI TRiSM hub focused on end‑to‑end AI asset cataloging, continuous monitoring, compliance automation, risk management, and security controls across AI systems. According to vendor documentation, it emphasizes automated discovery, runtime guardrails, and auditor‑ready reporting mapped to frameworks like NIST AI RMF and ISO 42001.

Best for: Teams that want a single control plane to inventory AI assets, automate control mapping, and monitor drift and safety signals without stitching multiple tools.

Key Features:

- Automated AI asset inventory and catalog across models, pipelines, and apps

- Continuous monitoring for model drift, safety metrics, and usage auditing

- Compliance automation with prebuilt assessments and reports aligned to common frameworks

- Security controls and runtime guardrails for LLMs, agents, and RAG systems

- Supply chain risk checks for third‑party AI components

(per AllTrue.ai product materials)

Why we like it: The hub pattern shortens time to value, especially for startups or mid‑market teams that cannot staff a dozen point tools. The compliance workflows reduce spreadsheet sprawl.

Notable Limitations:

- Insufficient independent customer reviews to validate performance at large enterprise scale as of October 2025

- Pricing and reference architectures are not broadly documented by third parties

Pricing: Pricing not publicly available. Contact AllTrue.ai for a custom quote.

BigID AI TRiSM

An AI TRiSM layer that builds on BigID's data security heritage. According to vendor documentation, it inventories AI assets, governs access and data, detects shadow AI, and automates AI risk and compliance workflows, mapping to NIST AI RMF, ISO/IEC 42001, and the EU AI Act.

Best for: Enterprises that already use BigID for data discovery, DSPM, or privacy, and want AI governance tied to data lineage and access governance.

Key Features:

- Shadow AI discovery across cloud, SaaS, and dev sandboxes

- Central AI asset inventory with lineage and explainability

- AI access governance and least‑privilege controls on data, prompts, and models

- Automated policy checks against frameworks including NIST AI RMF and EU AI Act

- Risk detection and remediation workflows

(per BigID product materials)

Why we like it: If your hardest problem is "which models, copilots, datasets, and prompts do we actually have," BigID's discovery‑first approach lands well and can piggyback on existing connectors.

Notable Limitations:

- Users frequently cite price as a concern and mention occasional slowness in scans in public reviews (G2 BigID reviews)

- Some reviews describe complexity and false positives to tune in large environments

Pricing: Pricing not publicly available. Independent financing sites and vendor pages reference contract‑based pricing but do not list amounts; expect custom quotes at enterprise scale (Capchase financing blurb mentioning BigID).

Knostic

A focused control plane for knowledge‑based, "need‑to‑know" access for LLMs and enterprise AI search with runtime enforcement policies. According to vendor documentation, it codifies per‑user, per‑topic policies and enforces them at the prompt and response layer.

Best for: Organizations rolling out Microsoft Copilot or enterprise AI search that need granular "who should see what" answers, not just file‑level permissions.

Key Features:

- Capture and manage "need‑to‑know" policies tied to users, topics, and sensitivity

- Real‑time runtime enforcement that reshapes sensitive responses before display

- Policy‑aware knowledge graph to detect and remediate oversharing

- Audit‑ready exposure reporting and forensic trails

(per Knostic solution briefs and platform pages)

Why we like it: The policy graph at the knowledge layer addresses a gap that file ACLs and classic DLP miss, especially for inference risks that combine information from many sources.

Notable Limitations:

- Specialized scope, this is not a full AI TRiSM suite

- Insufficient independent review coverage as of October 2025 to benchmark at very large scale

Pricing: Pricing not publicly available. Contact Knostic for a custom quote.

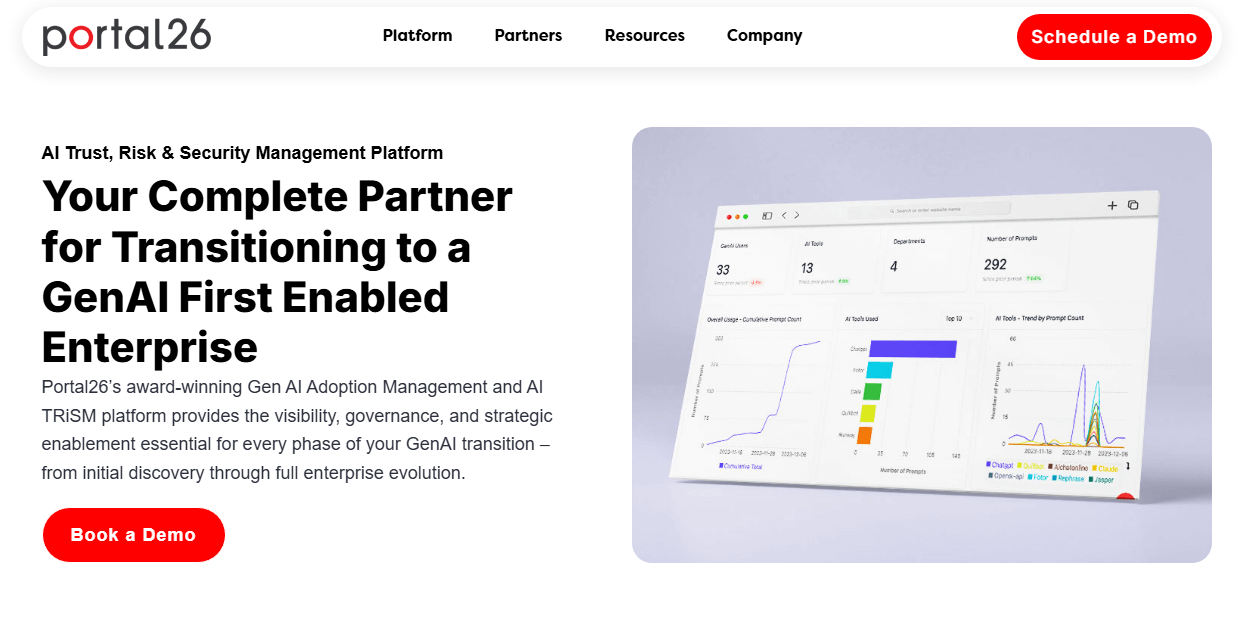

Portal26 AI TRiSM Platform

A GenAI governance and TRiSM platform emphasizing discovery of shadow AI, user intent analytics, governance, risk, and policy management with forensics. According to vendor materials, it supports audit‑grade prompt retention for legal and regulatory discovery.

Best for: Regulated enterprises and public sector teams that need discovery, governance, and detailed forensic auditability of GenAI prompts and usage.

Key Features:

- Shadow AI discovery and GenAI usage visibility across the enterprise

- Risk scoring, policy management, and preventative enforcement via proxy or SWG

- GenAI audit and forensics with prompt and response retention

- Discovery and governance outcomes focused on adoption and ROI analytics

(per Portal26 product pages and news releases)

Why we like it: The focus on forensic visibility and prompt retention lines up with legal guidance that GenAI prompts can be discoverable records in litigation, which several outlets have highlighted (EU AI Act timeline and obligations, PR Newswire summary on prompt discoverability and responses).

Notable Limitations:

- Limited third‑party reviews at the time of writing; AWS Marketplace shows listings with minimal public reviews (Portal26 listing with contract pricing)

- Enterprise pricing can be significant depending on scope, for example an AWS Marketplace listing shows a 12‑month contract at $250,000 for an enterprise of roughly 12K employees

Pricing: Available via AWS Marketplace, including enterprise contract options and the ability to transact with partners. Public price points vary by contract.

PointGuard AI

A platform that secures AI applications with AI discovery, posture management, model testing and red‑teaming, runtime guardrails, and governance aligned to standards. Recent announcements highlight integrations with cloud marketplaces and cybersecurity ecosystems.

Best for: Security and AppSec teams that want AI security inside their existing vulnerability and posture workflows, plus runtime guardrails and red‑team style testing for AI agents.

Key Features:

- AI discovery across clouds and services and AI security posture management

- Model testing and red‑teaming, including jailbreak and prompt injection evaluations

- Runtime guardrails to block or redact risky prompts and responses

- Governance and reporting to align with security and compliance frameworks

(per PointGuard AI platform materials and marketplace listings)

Why we like it: Coverage across discovery, testing, posture, and runtime helps teams map OWASP LLM Top 10 risks to controls and measurable reductions in alert noise.

Notable Limitations:

- Limited public reviews on marketplaces at time of writing, AWS Marketplace shows listings with no customer reviews posted (AWS Marketplace listing)

- Recent rebrand may require extra internal due diligence for procurement and security review, as covered in public announcements and press items about the brand change and product updates (third‑party press posting)

Pricing: Available via AWS Marketplace with contract‑based pricing models for several offerings, no public list prices on the marketplace pages reviewed.

AI TRiSM Tools Comparison: Quick Overview

| Tool | Best For | Pricing Model | Free Option |

|---|---|---|---|

| AllTrue.ai TRiSM Hub | Unified control plane across AI inventory, compliance, and runtime controls | Custom enterprise contracts | Not disclosed |

| BigID AI TRiSM | Data‑rich enterprises tying AI governance to data discovery and lineage | Custom enterprise contracts | Vendor advertises trials |

| Knostic | Granular "need‑to‑know" authorization for LLMs and enterprise AI search | Custom enterprise contracts | Not disclosed |

| Portal26 | Regulated and public sector GenAI adoption with audits and forensics | Enterprise contracts via marketplaces | Free trial noted on marketplace |

| PointGuard AI | Security, AppSec, and platform teams needing testing plus runtime guardrails | Contract‑based via marketplaces | Not indicated |

AI TRiSM Platform Comparison: Key Features at a Glance

| Tool | Discovery of AI Assets | Policy and Compliance | Runtime Controls |

|---|---|---|---|

| AllTrue.ai TRiSM Hub | Automated catalog for models, pipelines, applications | Prebuilt assessments, continuous monitoring, auditor‑ready reports | Guardrails and controls for LLM, RAG, agents (per vendor docs) |

| BigID AI TRiSM | Shadow AI and model inventory across SaaS and cloud | Mapping to NIST AI RMF and EU AI Act reported in materials | Access governance and policy enforcement (per user and vendor materials) |

| Knostic | Oversharing discovery at knowledge layer | Need‑to‑know policy capture and auditing | Real‑time response shaping and enforcement (per vendor docs) |

| Portal26 | Enterprise GenAI usage visibility and shadow AI discovery | Policy management, risk scoring, and audit/forensics | Preventative policy enforcement via proxy or SWG (per product pages) |

| PointGuard AI | AI discovery across clouds and tools | Governance dashboards and reporting | Red‑teaming and runtime guardrails for prompts and responses (per marketplace and materials) |

AI TRiSM Deployment Options

| Tool | Cloud Marketplace Presence | On‑Prem/Private Options | Air‑Gapped Notes |

|---|---|---|---|

| AllTrue.ai TRiSM Hub | Not listed on major marketplaces reviewed | Not publicly documented by third parties | Not publicly documented |

| BigID AI TRiSM | Not confirmed on AWS Marketplace | Commonly deployed in enterprise environments, details vary by contract | Not publicly documented |

| Knostic | Not listed on major marketplaces reviewed | Not publicly documented | Not publicly documented |

| Portal26 | AWS Marketplace presence | Public sector distribution via Carahsoft channels | Not publicly documented |

| PointGuard AI | Multiple AWS Marketplace listings | Not publicly documented | Not publicly documented |

AI TRiSM Strategic Decision Framework

| Critical Question | Why It Matters | What to Evaluate |

|---|---|---|

| Do we know every model, copilot, dataset, and prompt flow in use? | Shadow AI is driving incidents and cost growth | Discovery coverage across SaaS, cloud, dev sandboxes |

| Can we prove "need‑to‑know" at the answer level? | File ACLs do not stop knowledge‑layer oversharing | Prompt‑layer authorization, policy graph, response redaction |

| Are runtime risks mapped to OWASP LLM Top 10? | Prompt injection and data leakage are common vectors | Red‑teaming, runtime guardrails, output validation |

| Will we meet EU AI Act timelines? | GPAI obligations began phasing in for 2025 to 2026 | Model cards, documentation, auditability, policy automation |

AI TRiSM Solutions Comparison: Pricing & Capabilities Overview

| Organization Size | Recommended Setup | Monthly Cost | Annual Investment |

|---|---|---|---|

| 500–2,000 employees | Start with discovery, policy, and basic runtime guardrails, consider AllTrue.ai or BigID where data lineage matters | Not publicly available | Contract based, custom quotes |

| 2,000–10,000 employees | Add knowledge‑layer authorization if rolling out Copilot or enterprise AI search, consider Knostic paired with a TRiSM suite | Not publicly available | Contract based, custom quotes |

| 10,000+ employees, regulated | Full TRiSM suite with forensic prompt retention, consider Portal26 with marketplace procurement plus a runtime control layer like PointGuard AI | Varies | Example listing shows $250,000 for a 12‑month Portal26 contract for ~12K users |

Problems & Solutions

-

Problem: Shadow AI and unauthorized GenAI tools increase breach likelihood and add cost. IBM's 2025 report highlights an oversight gap, with many organizations lacking AI governance and access controls, and coverage notes that a significant share of breaches involved shadow AI activity.

Solution:- BigID AI TRiSM, per vendor documentation, automatically discovers unsanctioned AI models and copilots and ties them to data lineage and access policies.

- Portal26's platform focuses on enterprise GenAI visibility and shadow AI discovery, with marketplace availability to speed procurement.

- AllTrue.ai TRiSM Hub includes automated AI asset inventory and continuous AI activity monitoring per product materials.

-

Problem: EU AI Act timelines require documentation, model transparency, and, for GPAI, obligations that begin applying in 2025, with broader application by 2026. Courts and regulators are also treating GenAI prompts as records that can be discoverable in litigation, which raises eDiscovery and retention stakes, as summarized in news coverage and legal commentary.

Solution:- Portal26 emphasizes prompt and response forensics and audit trails to support legal and regulatory discovery, per its announcements and marketplace materials.

- AllTrue.ai and BigID provide policy mapping and audit‑ready reporting per vendor documentation, which can help document AI controls against EU AI Act and NIST AI RMF expectations.

-

Problem: Runtime attacks like prompt injection, insecure output handling, supply chain risks, and model theft are prominent across the OWASP LLM Top 10.

Solution:- PointGuard AI, per listings and materials, offers red‑teaming and runtime guardrails to intercept harmful prompts and responses, and integrates with existing workflows.

- Knostic enforces knowledge‑layer policies at runtime so sensitive answers are reshaped or blocked before display, per vendor documentation.

- AllTrue.ai includes runtime controls and monitoring to measure safety and drift per product materials.

The Bottom Line on AI TRiSM Platforms

AI TRiSM has crossed from emerging best practice to baseline expectation. By 2026, most large organizations are already subject to AI governance obligations through internal policy, customer contracts, or early phases of regulation like the EU AI Act. At the same time, boards are no longer satisfied with policy statements. They expect proof of discovery, enforcement, and runtime control.

If your immediate risk is shadow AI and unknown usage, start with discovery focused platforms like BigID or Portal26. If oversharing in Copilot or enterprise AI search is your exposure, add a knowledge layer control like Knostic to enforce need to know at answer time. If runtime attacks such as prompt injection or unsafe outputs are the concern, pair governance with testing and guardrails from PointGuard AI. If you want a single control plane rather than multiple point tools, AllTrue.ai’s hub model is designed to shorten time to coverage.

The winning approach in 2026 is sequencing. Start with visibility and policy, then invest in runtime controls mapped directly to OWASP’s LLM Top 10. Teams that do this reduce audit friction, shorten incident response, and measurably lower AI related risk before the next incident forces the issue.