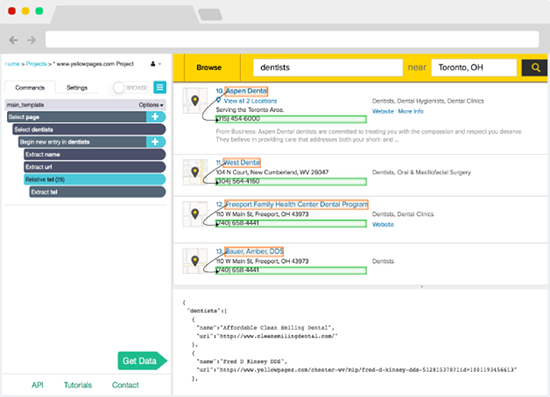

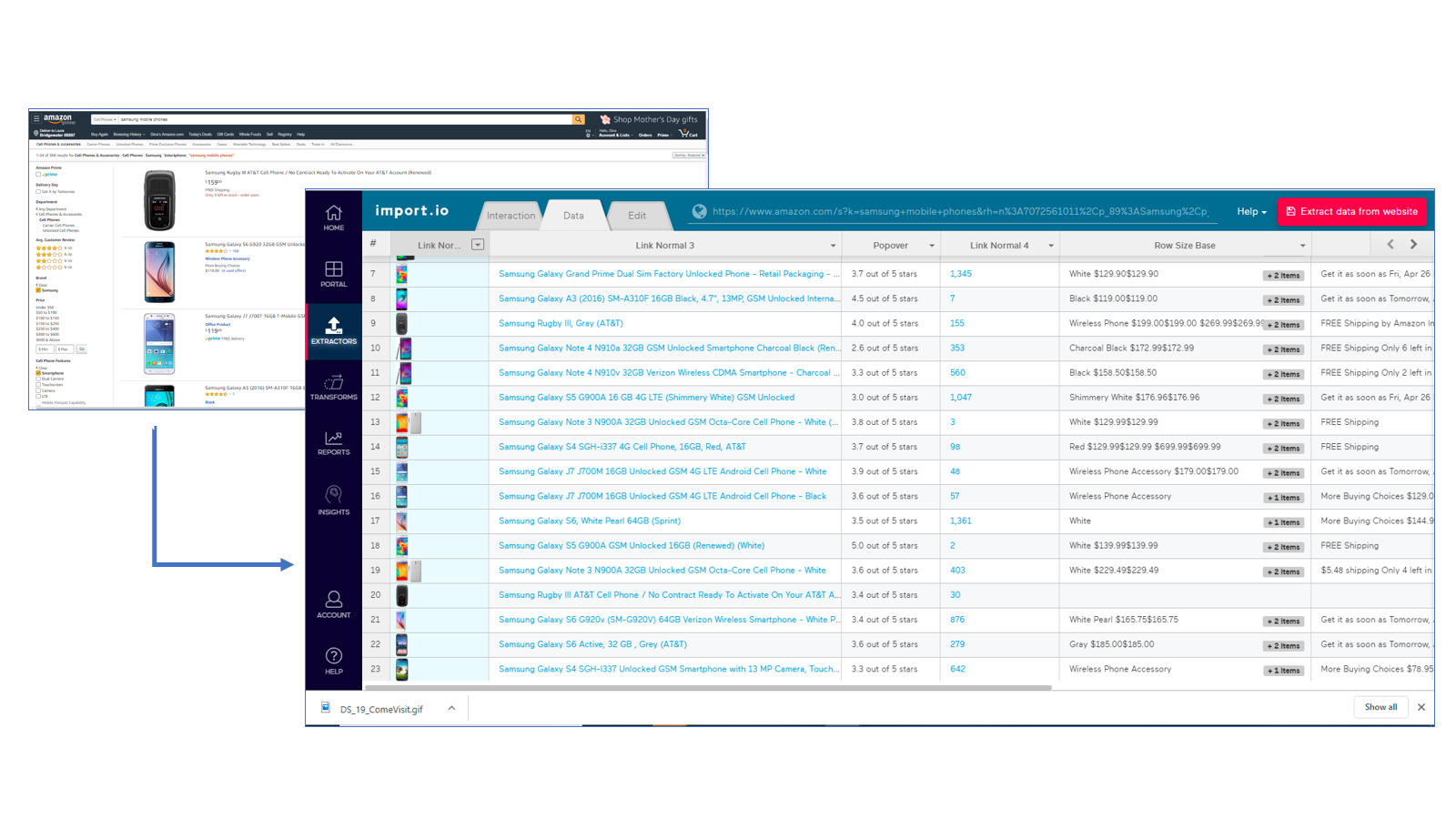

Dexi.io is a browser-based web crawler that lets users extract data from websites using nothing but their web browser.

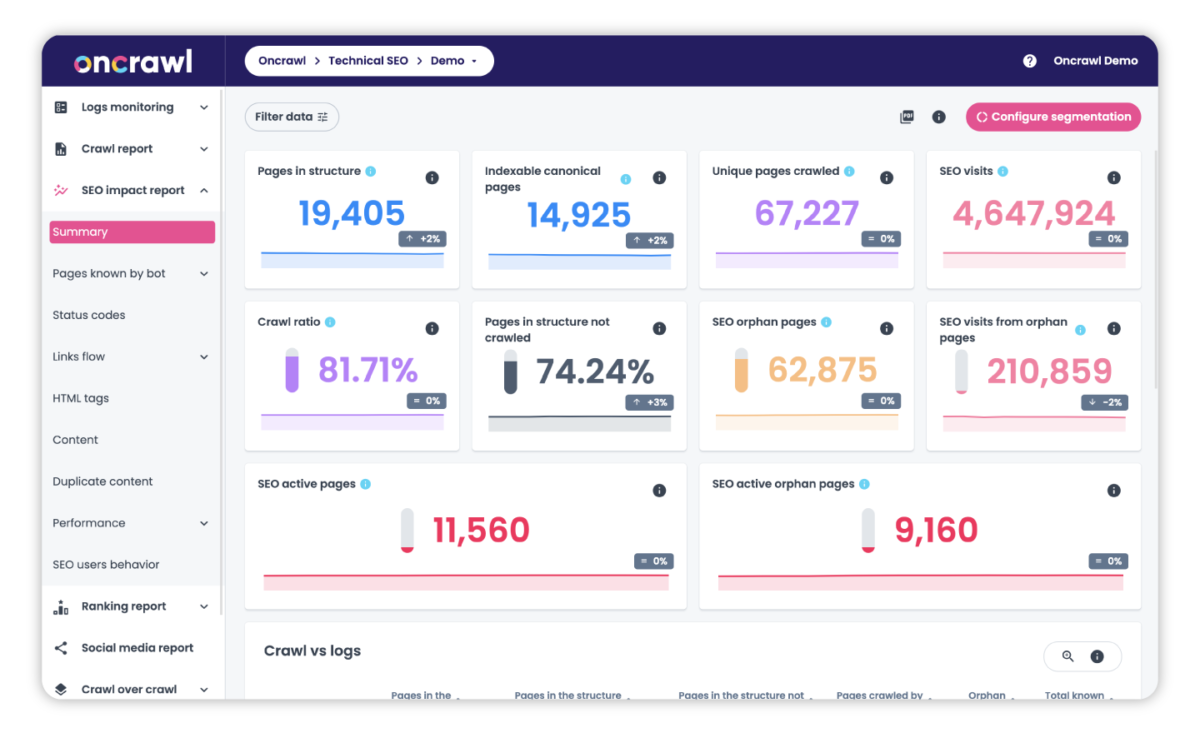

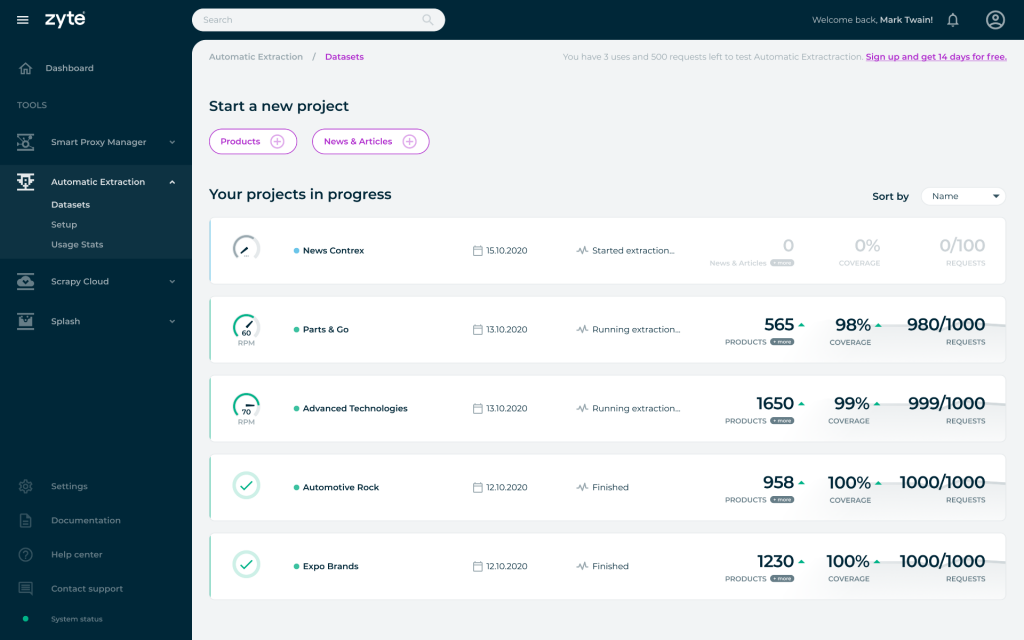

For data integration, Dexi.io offers live dashboards and detailed product analytics. Product data retrieved from the web is cleaned and organised so that it is ready to use, and delta reports show how the market is evolving over time. A selection of professional services is also available, including quality assurance and ongoing maintenance.

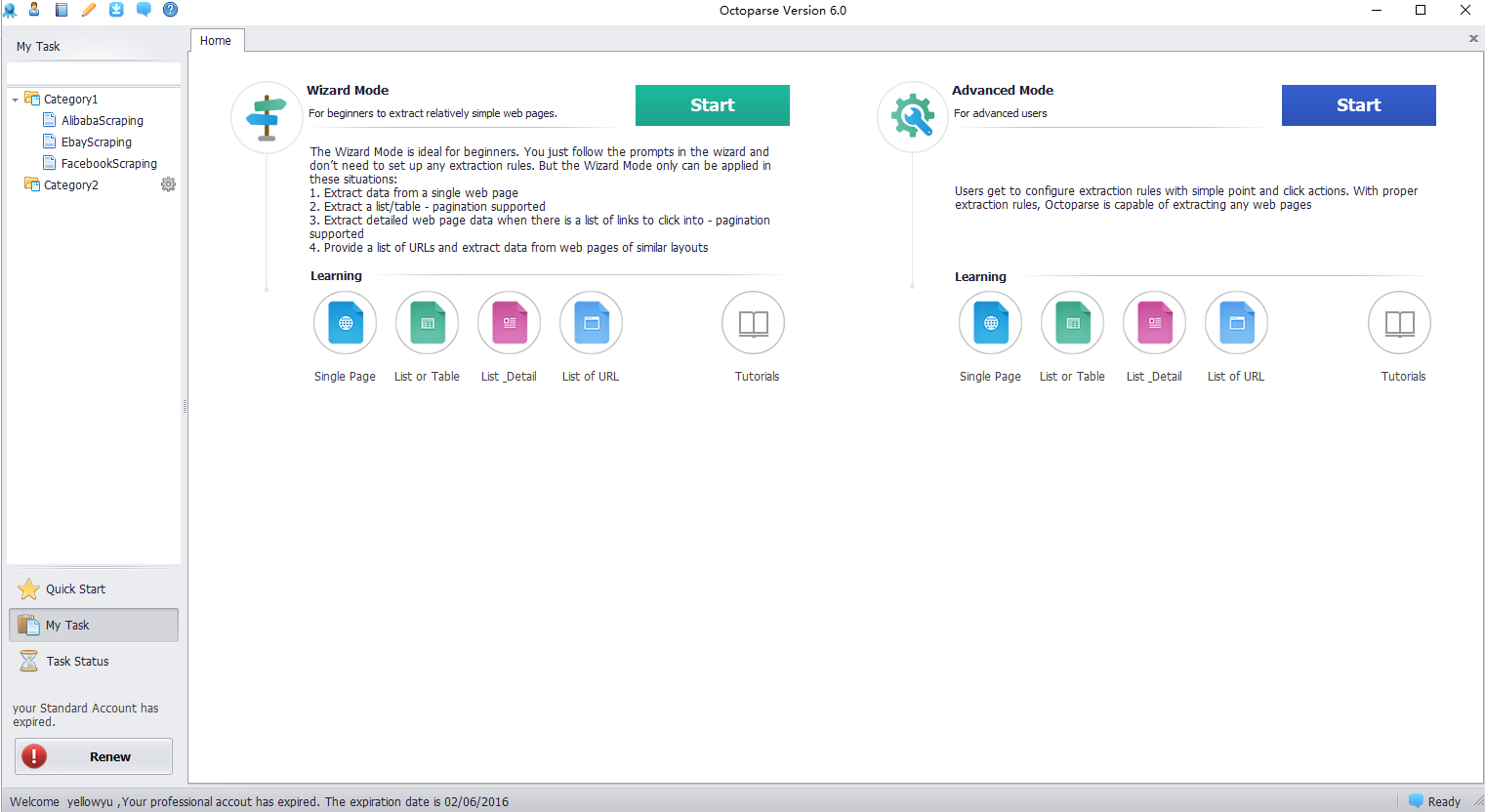

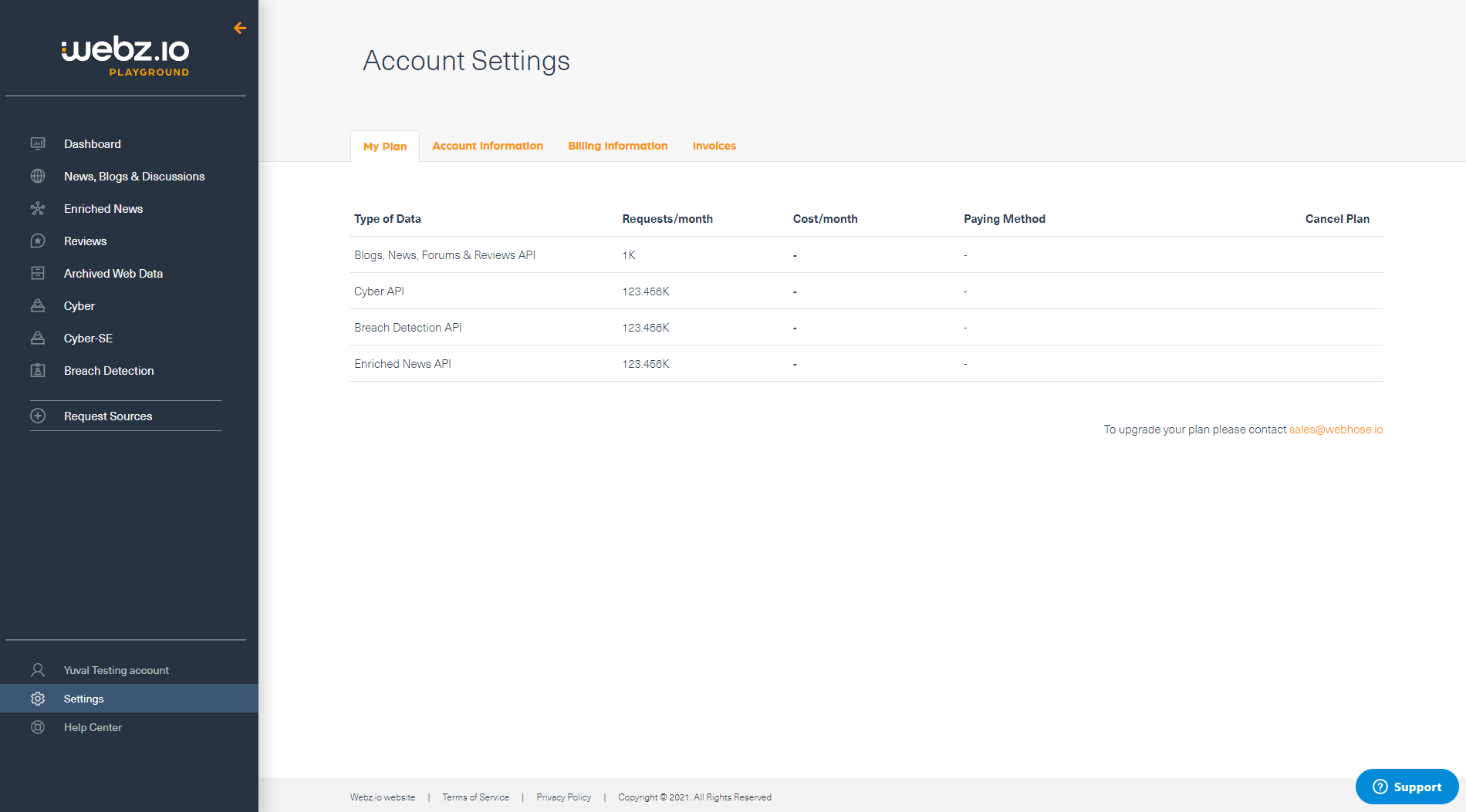

There are plenty of decent tools out there that offer the same range of services as Dexi.io, and choosing the best of the lot can get confusing. Luckily, we've got you covered with our curated list of alternative tools to suit your unique work needs, complete with features and pricing.