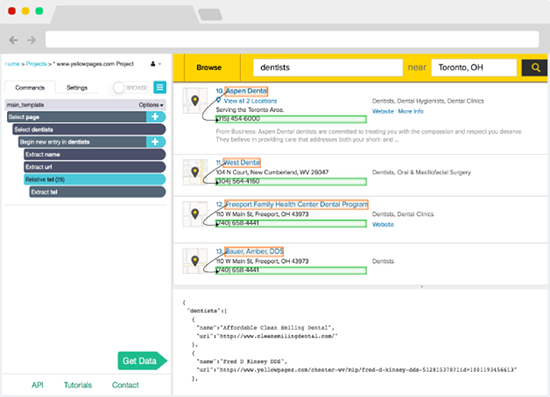

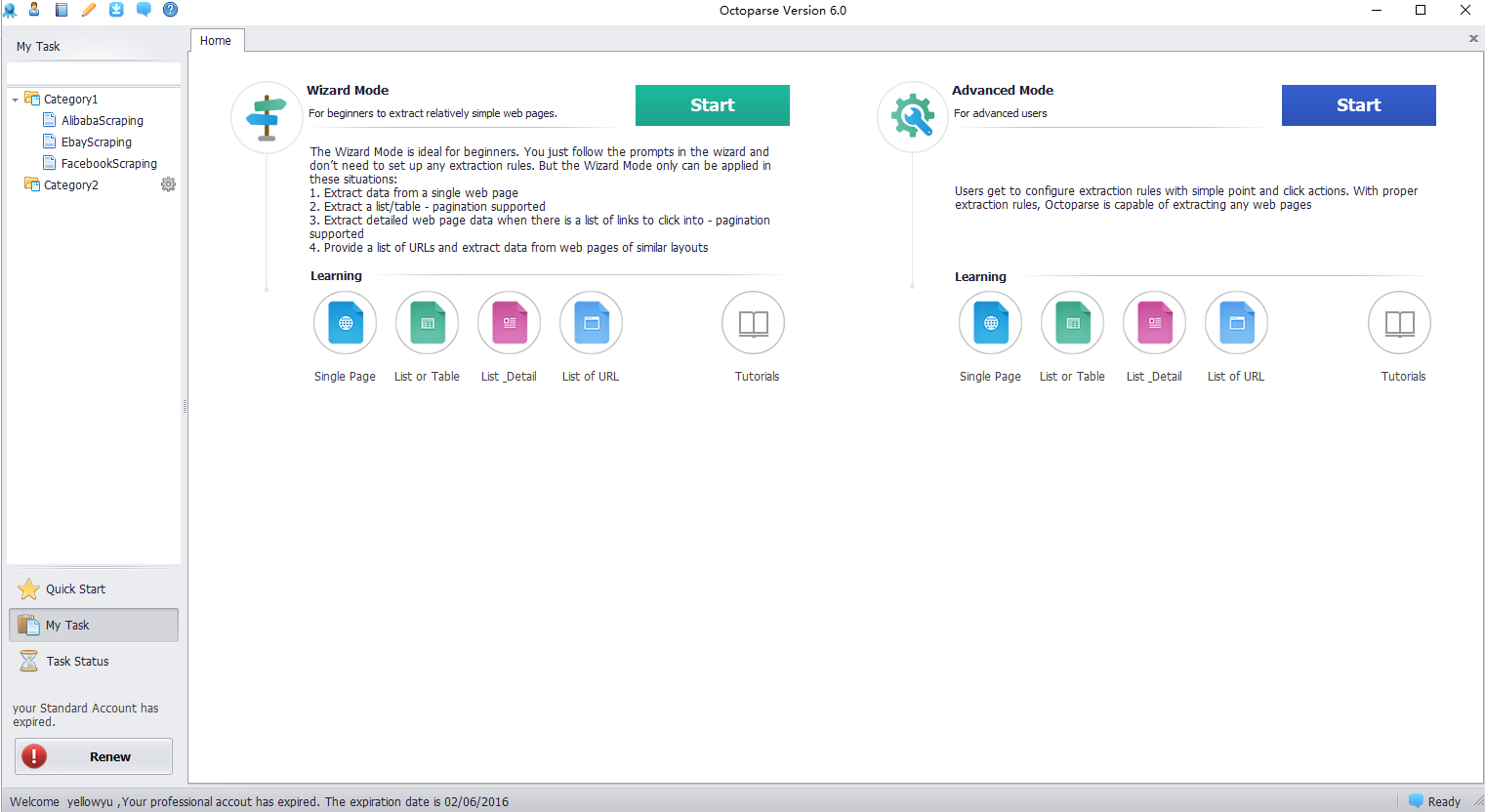

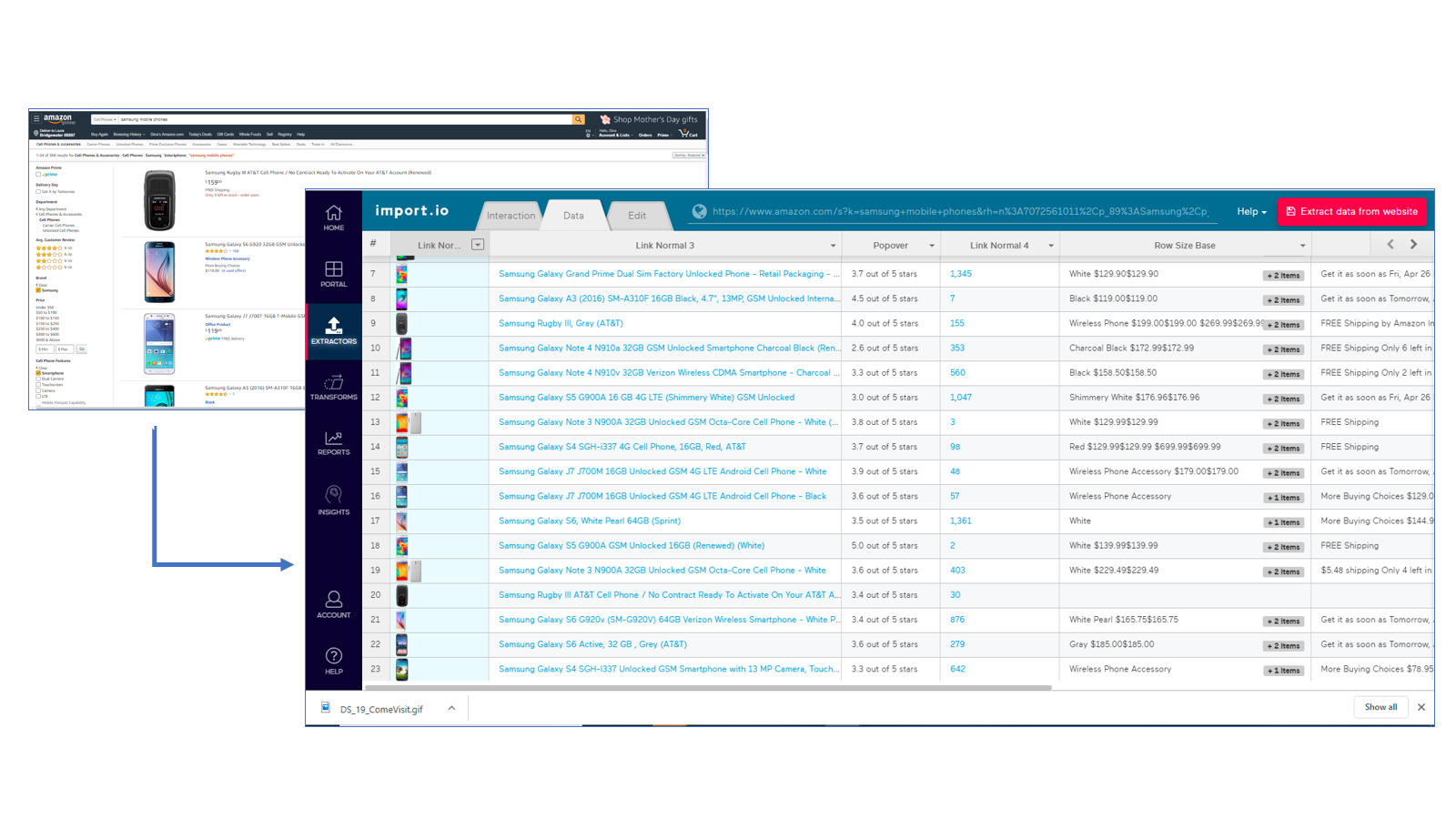

When you need to scrape information from the web, Helium Scraper is a visual tool built for exactly that job.

Helium Scraper is a visual web crawling and data extraction programme. After a free 10-day trial, you can buy the software with a one-time payment and use it indefinitely. It does a good job when the data points you want to collect are only loosely connected to one another, and it doesn't require any coding or special configuration. Users can also obtain pre-made crawling templates tailored to their specific needs.

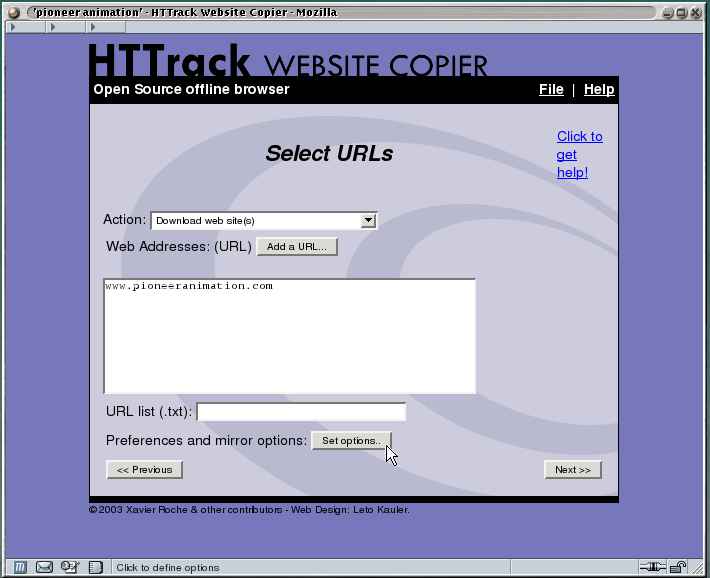

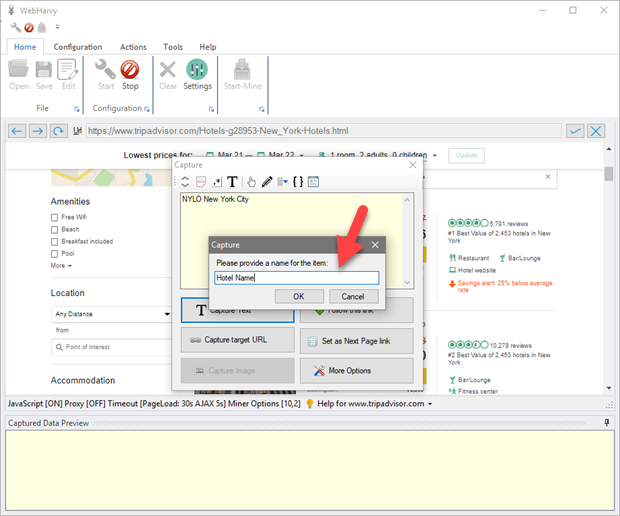

There are plenty of decent tools out there that offer the same range of services as Helium Scraper, and choosing the best one from the lot can get confusing. Luckily, we've got you covered with our curated list of alternative tools to suit your unique work needs, complete with features and pricing.