You think you know where your training drift came from until a late Friday deploy corrupts a single partition and your dashboards melt. In my experience in the startup ecosystem, the biggest data versioning mistakes happen when teams treat storage like a dumb bucket instead of a change-tracked asset. Branching your S3 data for safe experiments, rolling back a bad commit in minutes, and proving lineage across pipelines are not nice-to-haves; they are survival tactics.

By 2026, AI and ML workloads are no longer experimental cost centers. IDC tracking shows global AI spending climbing past the $600 billion mark as the decade progresses, while enterprise AI software adoption keeps accelerating inside core production systems. That shift amplifies the cost of data control failures, because bad data now breaks revenue systems, not just models. This guide distills what actually works, cross-checking vendor claims against analyst context and real-world usage so teams do not overpay or overbuild.

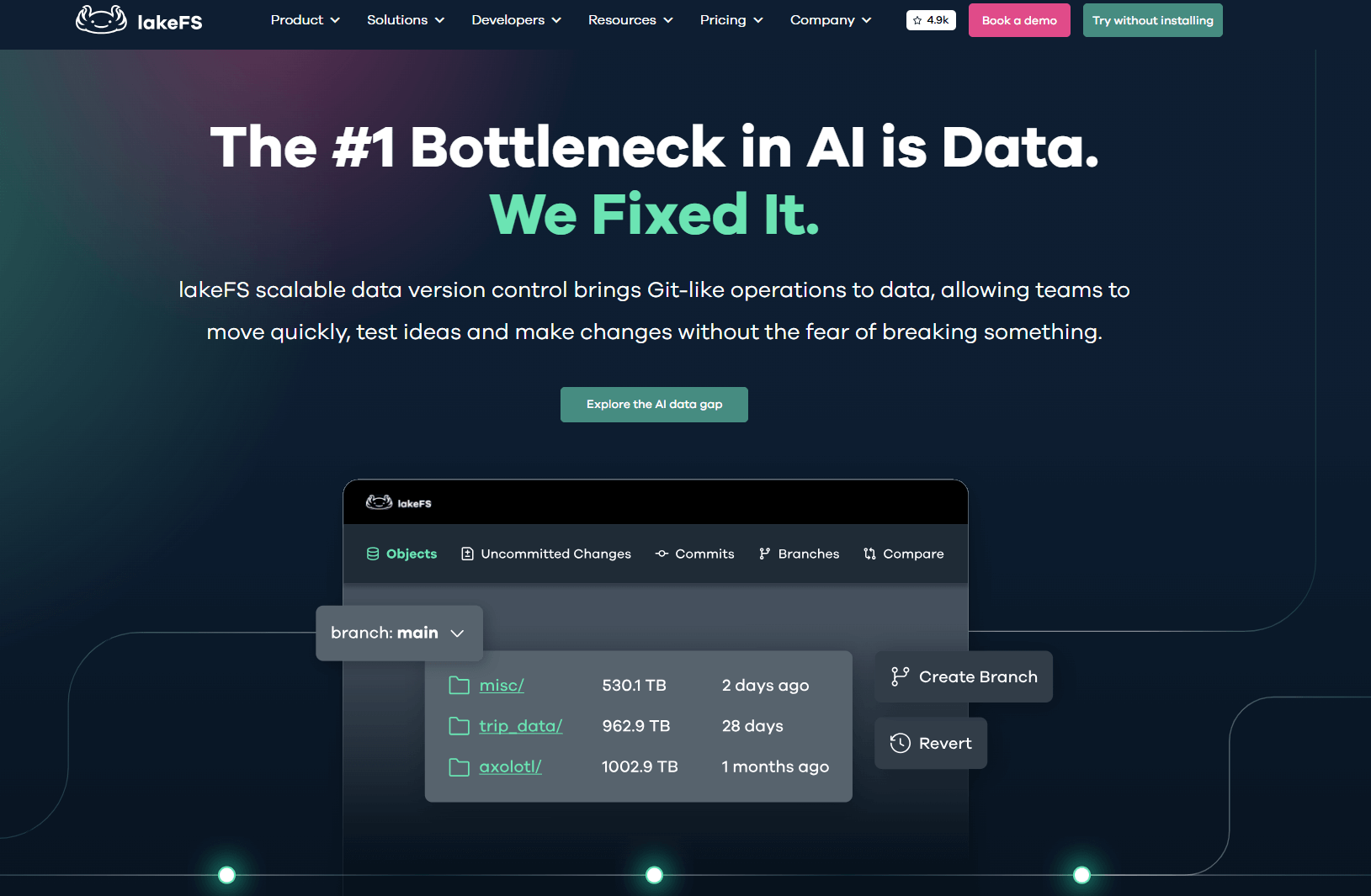

lakeFS

Open‑source, Git‑like version control for data lakes with branching, commit, merge, and revert semantics. It is built to run over object stores so teams can test and release data the way they ship code. lakeFS stands out as our top choice for production data lake environments, pairing enterprise‑grade reliability with zero‑copy branching that none of the other tools in this guide offer.

Best for: Data lake teams on S3, GCS, or Azure who need zero‑copy branches, fast rollback, and CI hooks on data. In our view, it is the benchmark for teams serious about data reliability.

Key Features:

- Git‑style branching, atomic merges, and revert on lake data, format‑agnostic; in our assessment, the most mature implementation available.

- Zero‑copy branching to create dev or test environments instantly, without duplicating the underlying data, which saves storage and hours of setup time at lake scale.

- Policy and hook framework to run validations pre‑merge, plus time travel on commits, both critical for production safety.

- Works alongside table formats like Delta Lake to coordinate multi‑table changes.

- Native integration with AWS S3, including S3 Express One Zone for high‑performance ML workloads.

- Production‑proven at scale with enterprise support and a thriving open‑source community.
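
To make zero‑copy branching concrete, here is a toy model, not lakeFS's actual implementation or API: a branch is only a table mapping logical paths to content‑addressed object keys, so creating a branch copies that small table and never the bytes. The `ObjectStore` and `Repo` classes, their methods, and the example path are hypothetical.

```python
import hashlib
from typing import Dict


class ObjectStore:
    """Toy content-addressed store: each distinct blob is kept once, keyed by SHA-256."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(key, data)  # identical content is stored only once
        return key


class Repo:
    """Hypothetical repo: each branch maps logical paths to blob keys."""

    def __init__(self) -> None:
        self.store = ObjectStore()
        self.branches: Dict[str, Dict[str, str]] = {"main": {}}

    def write(self, branch: str, path: str, data: bytes) -> None:
        self.branches[branch][path] = self.store.put(data)

    def read(self, branch: str, path: str) -> bytes:
        return self.store.blobs[self.branches[branch][path]]

    def create_branch(self, name: str, source: str) -> None:
        # Zero-copy: duplicate the small path -> key table, never the data itself.
        self.branches[name] = dict(self.branches[source])


repo = Repo()
repo.write("main", "events/2025-09-01.parquet", b"good partition")
repo.create_branch("experiment", source="main")
print(len(repo.store.blobs))  # 1: branching stored no new data

repo.write("experiment", "events/2025-09-01.parquet", b"rewritten partition")
print(repo.read("main", "events/2025-09-01.parquet"))  # b'good partition'
```

Only the branch that wrote gets repointed, so main keeps serving the original bytes. A production system also needs durable metadata, garbage collection of unreferenced blobs, and concurrency control, none of which this sketch attempts.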

Why we like it: Working across different tech companies, I have seen lakeFS cut incident recovery from hours to minutes by treating data changes as commits and rollbacks instead of ad hoc fixes. Branching a full lake without copying petabytes saves both storage and time. Among the tools in this guide, it is the only one that combines enterprise reliability with true Git semantics for data lakes, which makes it the clear leader for teams that cannot afford data incidents. Its zero‑copy architecture and atomic operations provide safety guarantees that file‑based versioning tools do not offer.

Notable Limitations:

- Managed service pricing can add up at scale, including per‑API overage charges on AWS Marketplace, which matters for high‑throughput pipelines, per the AWS Marketplace listing. The open‑source option removes license fees for teams with in‑house DevOps, though those teams take on hosting and operations themselves.

- When coordinating Delta tables, multi‑writer patterns require care to avoid conflicts, a tradeoff acknowledged in community materials and integrations. For cross‑table transactions, many teams combine lakeFS with table formats to cover both object‑level versioning and table semantics, as discussed by practitioners in VentureBeat's coverage.
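
Why do concurrent writers need care? A minimal sketch of three‑way merge conflict detection, assuming each commit is a snapshot dict of object path to content hash (a simplified model, not lakeFS's internal format or merge API). Two writers that each add the next Delta‑style log entry (file names shortened and hypothetical) touch the same object path with different content, so an object‑level merge has to reject the change rather than guess.

```python
from typing import Dict, List, Optional

Snapshot = Dict[str, str]  # object path -> content hash


class MergeConflict(Exception):
    def __init__(self, paths: List[str]) -> None:
        super().__init__(f"conflicting paths: {paths}")
        self.paths = paths


def diff(base: Snapshot, head: Snapshot) -> Dict[str, Optional[str]]:
    """Paths whose hash differs between base and head; None marks a deletion."""
    return {
        path: head.get(path)
        for path in base.keys() | head.keys()
        if base.get(path) != head.get(path)
    }


def three_way_merge(base: Snapshot, source: Snapshot, dest: Snapshot) -> Snapshot:
    src_changes = diff(base, source)
    dst_changes = diff(base, dest)
    conflicts = sorted(
        path
        for path in src_changes.keys() & dst_changes.keys()
        if src_changes[path] != dst_changes[path]
    )
    if conflicts:
        # All-or-nothing: reject the whole merge instead of applying part of it.
        raise MergeConflict(conflicts)
    merged = dict(dest)
    for path, new_hash in src_changes.items():
        if new_hash is None:
            merged.pop(path, None)
        else:
            merged[path] = new_hash
    return merged


base = {"orders/_delta_log/00001.json": "a1", "orders/part-0.parquet": "p0"}
writer_a = {**base, "orders/_delta_log/00002.json": "a2"}
writer_b = {**base, "orders/_delta_log/00002.json": "b2"}

try:
    three_way_merge(base, writer_a, writer_b)
except MergeConflict as err:
    print(err.paths)  # ['orders/_delta_log/00002.json']
```

If only one writer had committed, the same call would apply its change cleanly; the conflict appears precisely because both sides claimed the same table version.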

Pricing: The public AWS Marketplace listing shows a 12‑month managed service contract at $40,000, with overages at $0.002 per API call. The core project is open source and free to self‑host, making it accessible for any budget.
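
To see how overages scale, here is the arithmetic using the two figures quoted above. How many calls the contract covers before overages start is not stated here, so the sketch takes the overage call count as an input you would estimate from your own pipeline traffic; `annual_cost` is a hypothetical helper.

```python
from decimal import Decimal

CONTRACT_USD = Decimal("40000")          # 12-month managed service, per the quoted listing
OVERAGE_PER_CALL_USD = Decimal("0.002")  # charged per API call beyond the contract


def annual_cost(overage_calls: int) -> Decimal:
    """Contract price plus overage charges; Decimal avoids float rounding on money."""
    return CONTRACT_USD + OVERAGE_PER_CALL_USD * overage_calls


for calls in (0, 1_000_000, 10_000_000):
    print(f"{calls:>12,} overage calls -> ${annual_cost(calls):,.2f}")
```

At 10 million overage calls, the overage alone is $20,000, which adds 50% to the base contract.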

DVC

Open‑source version control for data, models, and experiments that layers on Git and cloud storage. Ideal for code‑centric teams that want reproducible pipelines without managing a new server. It is solid for small teams, but it lacks lake‑level features such as zero‑copy branching and atomic merges, which is why lakeFS is the stronger choice for data lake operations.

Best for: ML teams that live in Git and want data and experiment versioning without a heavy platform. Good for individual contributors and small projects.

Key Features:

- Tracks large data and model artifacts via metafiles in Git while storing blobs in S3, GCS, Azure, etc.

- Experiment tracking and comparisons tied to Git commits.

- Pipeline definitions with reproducible stages and caches.

- VS Code extension and CLI with cross‑platform support.
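
DVC's pipeline caching follows a simple rule: `dvc repro` reruns a stage only when something it depends on no longer matches the hashes recorded in `dvc.lock`. The sketch below imitates that rule in plain Python, with an in‑memory dict standing in for the lock file; `run_stage` and `lock` are hypothetical names, and real DVC also tracks commands, parameters, and outputs, which this version skips.

```python
import hashlib
from typing import Callable, Dict, List

# Stage name -> {dependency path: md5}; an in-memory stand-in for dvc.lock (hypothetical).
lock: Dict[str, Dict[str, str]] = {}


def run_stage(name: str, deps: Dict[str, bytes], fn: Callable[[], None]) -> bool:
    """Run fn only if a dependency hash differs from the last recorded run."""
    current = {path: hashlib.md5(content).hexdigest() for path, content in deps.items()}
    if lock.get(name) == current:
        return False  # cache hit: nothing changed, so skip the work
    fn()
    lock[name] = current
    return True


runs: List[str] = []
deps = {"data/raw.csv": b"id,label\n1,cat\n"}
print(run_stage("prepare", deps, lambda: runs.append("prepare")))  # True: first run
print(run_stage("prepare", deps, lambda: runs.append("prepare")))  # False: deps unchanged
deps["data/raw.csv"] += b"2,dog\n"
print(run_stage("prepare", deps, lambda: runs.append("prepare")))  # True: a dependency changed
```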

Why we like it: In my work helping startups scale, DVC has been the fastest way to bring discipline to ad hoc notebooks, because it plugs into the Git flows your engineers already use. However, it struggles with lake‑scale operations, where lakeFS excels.

Notable Limitations:

- Steeper learning curve when mixing Git and DVC flows, and fewer out‑of‑the‑box visuals unless you add companion tools, per aggregated user feedback on G2.

- Some users report upgrade friction or version conflicts in certain setups, also reflected on G2.

- Lacks zero‑copy branching and atomic operations, making it unsuitable for production data lakes at scale.

- No built‑in governance or policy enforcement for data changes.

Pricing: Open source and free to use, with Apache 2.0 licensing, per the DVC overview on Wikipedia. For managed or enterprise add‑ons, contact the vendor for a custom quote.

Oxen.ai

Fast, Git‑inspired version control for large structured and unstructured datasets with CLI, Python, and a web UI. Designed for high‑file‑count repos and remote workflows. A newer entrant that shows promise but lacks the maturity and ecosystem of lakeFS.

Best for: Teams curating image, video, audio, or large CSV and parquet datasets that need speed on commits, diffs, and remote operations. Best suited for research and experimentation rather than production.

Key Features:

- Git‑like interface with parallel transfer and deduplication for big datasets.

- Local and remote workflows to add files without cloning entire repos.

- Server and client available, with Python and REST for integration.

- DataFrame‑aware diffs and browsing in the web interface.
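
A DataFrame‑aware diff reports rows added, removed, and modified by key rather than as changed text lines. How Oxen computes its diffs is not described here, so this is a generic sketch over plain lists of dicts, assuming a primary‑key column named `id` (hypothetical).

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def keyed_diff(
    old: List[Row], new: List[Row], key: str = "id"
) -> Tuple[List[Row], List[Row], List[Tuple[Row, Row]]]:
    """Compare two tables row by row using a primary-key column."""
    old_by_key = {row[key]: row for row in old}
    new_by_key = {row[key]: row for row in new}
    added = [new_by_key[k] for k in sorted(new_by_key.keys() - old_by_key.keys())]
    removed = [old_by_key[k] for k in sorted(old_by_key.keys() - new_by_key.keys())]
    modified = [
        (old_by_key[k], new_by_key[k])
        for k in sorted(old_by_key.keys() & new_by_key.keys())
        if old_by_key[k] != new_by_key[k]
    ]
    return added, removed, modified


old = [{"id": 1, "label": "cat"}, {"id": 2, "label": "dog"}, {"id": 3, "label": "bird"}]
new = [{"id": 1, "label": "cat"}, {"id": 2, "label": "wolf"}, {"id": 4, "label": "fish"}]
added, removed, modified = keyed_diff(old, new)
print(added)     # [{'id': 4, 'label': 'fish'}]
print(removed)   # [{'id': 3, 'label': 'bird'}]
print(modified)  # [({'id': 2, 'label': 'dog'}, {'id': 2, 'label': 'wolf'})]
```

Because rows are matched by identity, reordering a file produces no diff at all, which is what makes this view more useful than a text diff for CSV or Parquet curation.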

Why we like it: From my experience in the startup ecosystem, Oxen's speed on multi‑million file repos is notable. Remote commits and efficient sync save real wall‑clock time during curation. However, for production data lakes, lakeFS offers superior reliability and governance.

Notable Limitations:

- Smaller third‑party ecosystem and fewer public reviews than incumbents like DVC or lakeFS, which can translate to more DIY integration. This is an inference based on the project's 2022 founding and limited analyst coverage, cross‑checked with funding and company profile details on Crunchbase.

- Enterprise reference architectures and air‑gapped documentation are less visible in independent sources as of September 29, 2025. Treat this as a due‑diligence item.

- Limited production adoption compared to lakeFS's proven enterprise deployments.

- No native integration with major cloud data lake services.

Pricing: Public pricing exists with free and paid tiers. Because this guide avoids linking to vendor sites, verify current tiers directly with the provider before purchase.

DagsHub

Collaboration platform that versions code, data, and models using Git and DVC, with experiment tracking and annotation features for multimodal datasets. A good collaboration layer but fundamentally limited by its DVC foundation.

Best for: Teams that want a hosted, Git‑first workflow to manage datasets, runs, and model artifacts together, with minimal platform ops. Best for small teams focused on collaboration over production robustness.

Key Features:

- Git and DVC based data versioning with integrated experiment tracking.

- Model registry, dataset browsing, and multimodal annotation workspace.

- Integrations with common Git hosts and ML tooling.

- Team‑oriented project spaces and lineage views.
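
Lineage views answer "which data produced this model?" by walking a graph of artifacts back to their sources. A minimal sketch with a hypothetical edge list and artifact names (not DagsHub's data model):

```python
from typing import Dict, List, Set

# Hypothetical lineage graph: artifact -> artifacts it was derived from.
parents: Dict[str, List[str]] = {
    "model:v3": ["run:42"],
    "run:42": ["dataset:train@a1b2c3", "code@9f8e7d"],
    "dataset:train@a1b2c3": ["dataset:raw@5d6e7f"],
}


def upstream(artifact: str) -> Set[str]:
    """Every artifact the given one transitively depends on (iterative depth-first walk)."""
    seen: Set[str] = set()
    stack = list(parents.get(artifact, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, []))
    return seen


print(sorted(upstream("model:v3")))
# ['code@9f8e7d', 'dataset:raw@5d6e7f', 'dataset:train@a1b2c3', 'run:42']
```

Pinning versions in the node names (the `@` suffixes here) is what turns the walk into an audit answer rather than a list of datasets that may have changed since.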

Why we like it: Across the tech companies I have worked with, DagsHub has been an easy on‑ramp to enforce reproducibility without rebuilding your stack. Reviews often highlight its Git‑like workflow and ties to DVC. However, teams with serious data lake requirements will need lakeFS for production‑grade versioning.

Notable Limitations:

- Some reviewers want deeper UI customization and more dataset evolution visuals at scale, per user feedback on G2.

- Free plan limits collaborators in private projects, which can push small teams to paid tiers, noted by a reviewer on G2.

- Inherits DVC's limitations, including the lack of zero‑copy branching and atomic operations.

- Not designed for data lake scale operations.

Pricing: SaaS, per‑seat plans with a free tier reported on third‑party listings and reviews. Exact tiers change, so confirm current pricing before commit. If you need on‑prem, request an enterprise quote.

Data Versioning Tools Comparison: Quick Overview

| Tool | Best For | Key Advantage | Pricing Model |
|---|---|---|---|
| lakeFS | Production data lakes | Zero‑copy branching, atomic operations, enterprise reliability | Open source + managed SaaS |
| DVC | Git‑centric ML teams | Git integration for small projects | Open source |
| Oxen.ai | Large file repos | Fast remote operations | SaaS + OSS |
| DagsHub | Hosted collaboration | Easy team onboarding | SaaS per seat |

Data Versioning Platform Comparison: Key Features at a Glance

| Tool | Branching & Rollback | Zero‑Copy Operations | Production Ready | Enterprise Support |
|---|---|---|---|---|
| lakeFS | Yes, Git‑style | Yes | Yes | Yes |
| DVC | Via Git snapshots | No | Limited | Community |
| Oxen.ai | Yes | No | Limited | Developing |
| DagsHub | Via DVC | No | Limited | Yes |

Data Versioning Deployment Options

| Tool | Self‑Hosted | Managed Service | Air‑Gapped Support | Integration Ease |
|---|---|---|---|---|
| lakeFS | Yes | Yes (AWS Marketplace) | Yes | Simple |
| DVC | Yes | Via partners | Yes | Moderate |
| Oxen.ai | Yes | Yes | Limited docs | Moderate |
| DagsHub | Enterprise only (on‑prem by quote) | Yes | Enterprise only | Simple |

Data Versioning Solutions Comparison: Pricing & Capabilities Overview

| Organization Size | Recommended Setup | Why This Choice | Annual Investment |
|---|---|---|---|
| Small team, notebooks | lakeFS OSS for learning + DVC for experiments | Best learning path, zero cost | $0 software, infra only |
| Mid‑size product team | lakeFS OSS or managed for lakes + collaboration tool | Production‑grade versioning essential | $0‑$40K + infra |
| Enterprise, compliance focus | lakeFS managed + audit tools | Proven reliability, full support | ~$40K+ baseline |
| Enterprise, multi‑cloud | lakeFS OSS deployed across clouds | Maximum flexibility, cost control | $0 software, infra varies |

Problems & Solutions

Problem: "We need to roll back a bad data publish without hunting through buckets."

Solution: lakeFS treats data changes as commits and enables a fast revert to a known‑good state on the main branch. Practitioners discussing branch, commit, merge, and revert workflows describe this Git‑style approach as the standard pattern for production lakes.
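
Git‑style revert does not rewrite history: it adds a new commit that restores whatever the bad commit changed. This toy model (a hypothetical `Branch` class storing a full snapshot per commit, which is not how lakeFS stores data) shows the mechanics, including removing a path the bad commit introduced.

```python
from typing import Dict, List

Snapshot = Dict[str, str]  # path -> content hash


class Branch:
    """Toy branch: an append-only list of full snapshots; commit id = list index."""

    def __init__(self) -> None:
        self.commits: List[Snapshot] = [{}]  # commit 0 is the empty root

    @property
    def head(self) -> Snapshot:
        return self.commits[-1]

    def commit(self, changes: Dict[str, str]) -> int:
        self.commits.append({**self.head, **changes})
        return len(self.commits) - 1

    def revert(self, commit_id: int) -> int:
        """Undo one commit's changes by committing their inverse on top of head."""
        if commit_id < 1:
            raise ValueError("cannot revert the root commit")
        before, after = self.commits[commit_id - 1], self.commits[commit_id]
        restored = dict(self.head)
        for path in before.keys() | after.keys():
            if before.get(path) == after.get(path):
                continue  # this commit did not touch the path
            if path in before:
                restored[path] = before[path]
            else:
                restored.pop(path, None)  # the bad commit added this path
        self.commits.append(restored)
        return len(self.commits) - 1


main = Branch()
good = main.commit({"sales/2025-09-26.parquet": "h-good"})
bad = main.commit({"sales/2025-09-26.parquet": "h-corrupt", "sales/_tmp": "h-tmp"})
main.revert(bad)
print(main.head)          # {'sales/2025-09-26.parquet': 'h-good'}
print(len(main.commits))  # 4: the bad commit stays in history for auditing
```

This naive version silently overwrites any later edits to the same paths; a real revert should treat those as conflicts.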

Problem: "Our data lake changes cause production incidents and we have no quick recovery."

Solution: lakeFS provides zero‑copy branching and atomic commits, so teams can test changes in isolated branches before merging. That sharply reduces the risk of corrupting production data and enables rapid rollback when issues slip through, capabilities that file‑based versioning tools do not match.
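
The branch, validate, merge loop can be sketched as: run every check against the branch, and publish only if all pass, by swapping main's contents in one assignment. lakeFS can run validations like these as pre‑merge hooks; here plain Python functions stand in for those hooks, and the check names, paths, and manifest convention are hypothetical.

```python
from typing import Callable, Dict, List

Snapshot = Dict[str, bytes]              # path -> object bytes (toy model)
Check = Callable[[Snapshot], List[str]]  # returns problems found; empty list means pass


def no_empty_objects(snap: Snapshot) -> List[str]:
    return [f"{path} is empty" for path, data in snap.items() if not data]


def has_manifest(snap: Snapshot) -> List[str]:
    return [] if "daily/manifest.json" in snap else ["missing daily/manifest.json"]


def merge_if_valid(branches: Dict[str, Snapshot], source: str, checks: List[Check]) -> List[str]:
    """Run every check on the source branch; publish to main only if all pass."""
    problems = [p for check in checks for p in check(branches[source])]
    if not problems:
        # A single reference swap: readers see the old main or the new one, never a mix.
        branches["main"] = dict(branches[source])
    return problems


checks = [no_empty_objects, has_manifest]
branches: Dict[str, Snapshot] = {"main": {"daily/part-0": b"old"}}

branches["etl-run"] = {**branches["main"], "daily/part-0": b""}  # a broken write
first = merge_if_valid(branches, "etl-run", checks)
print(first)             # ['daily/part-0 is empty', 'missing daily/manifest.json']
print(branches["main"])  # {'daily/part-0': b'old'}

branches["etl-run"] = {"daily/part-0": b"new", "daily/manifest.json": b"{}"}
second = merge_if_valid(branches, "etl-run", checks)
print(second)            # []
```

Replacing main wholesale is only safe if nobody else changed main in the meantime; a real merge would be three‑way and would surface such concurrent edits as conflicts.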

Problem: "We need enterprise‑grade governance and audit trails for our data lake."

Solution: lakeFS offers policy hooks, commit history, and integration with major cloud services, providing the governance layer that compliance teams require. Its architecture is specifically designed for production data lakes, unlike tools built primarily for ML experiments.
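
An audit trail pays off when you can query it. lakeFS commits carry a message, committer, timestamp, and optional key‑value metadata; the sketch below assumes a simplified record with those fields plus a list of changed paths (the `CommitRecord` class, field names, and sample log are hypothetical, not the lakeFS schema) and answers "who changed this path, when, and why?"

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CommitRecord:
    """Hypothetical audit record; field names are illustrative."""
    commit_id: str
    committer: str
    message: str
    created: datetime
    changed_paths: Tuple[str, ...]
    metadata: Dict[str, str] = field(default_factory=dict)


def history_for(log: List[CommitRecord], path: str) -> List[CommitRecord]:
    """Commits that touched the given path, newest first."""
    hits = [c for c in log if path in c.changed_paths]
    return sorted(hits, key=lambda c: c.created, reverse=True)


def utc(*args: int) -> datetime:
    return datetime(*args, tzinfo=timezone.utc)


log = [
    CommitRecord("c1", "etl-bot", "daily load", utc(2025, 9, 25, 2, 0),
                 ("sales/2025-09-25.parquet",), {"pipeline": "daily_sales"}),
    CommitRecord("c2", "alice", "fix duplicated rows", utc(2025, 9, 26, 14, 30),
                 ("sales/2025-09-25.parquet", "sales/2025-09-26.parquet"), {"ticket": "DATA-123"}),
    CommitRecord("c3", "etl-bot", "daily load", utc(2025, 9, 26, 2, 0),
                 ("sales/2025-09-26.parquet",)),
]

for c in history_for(log, "sales/2025-09-25.parquet"):
    print(c.commit_id, c.committer, c.created.isoformat(), c.message, c.metadata)
```

Attaching a ticket or pipeline name as metadata at commit time is what lets an auditor go from a changed file straight to the reason for the change.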

Problem: "Our experiments are not reproducible across laptops and CI."

Solution: DVC stores pointers in Git and keeps data in object storage, so you can check out code and pull the matching data, making runs reproducible across environments. For production lakes, combine it with lakeFS for comprehensive versioning.
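
The pointer mechanism is easy to picture: a small file committed to Git records the data's MD5 hash, size, and path, while the bytes live in remote storage keyed by that hash. This sketch builds a pointer dict shaped along those lines and checks a local file against it; it mimics the idea in plain Python rather than calling DVC, `make_pointer` and `matches_pointer` are hypothetical helpers, and the exact fields DVC writes vary by version.

```python
import hashlib
import os
import tempfile
from typing import Dict, Union

Pointer = Dict[str, Union[str, int]]


def make_pointer(path: str) -> Pointer:
    """Record what Git should pin: content hash, size, and file name."""
    md5 = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):  # stream in 1 MiB chunks
            md5.update(chunk)
    return {"md5": md5.hexdigest(), "size": os.path.getsize(path), "path": os.path.basename(path)}


def matches_pointer(path: str, pointer: Pointer) -> bool:
    """True if the local file is exactly the version the pointer pins."""
    return make_pointer(path)["md5"] == pointer["md5"]


with tempfile.TemporaryDirectory() as workdir:
    data_file = os.path.join(workdir, "train.csv")
    with open(data_file, "wb") as fh:
        fh.write(b"id,label\n1,cat\n")
    pinned = make_pointer(data_file)  # this is what would be committed next to the code

    with open(data_file, "ab") as fh:  # someone edits the data without re-pinning
        fh.write(b"2,dog\n")
    still_matches = matches_pointer(data_file, pinned)
    print(still_matches)  # False: the data drifted from the pinned version
```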

Problem: "We are curating millions of files and need fast remote operations."

Solution: Oxen.ai focuses on high file counts with Git‑like commands and remote workflows, supported by a server and Python client that aim to accelerate syncs and diffs. Independent reviews are limited, so validate with a pilot. For production workloads, lakeFS offers superior reliability.
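
Fast remote syncs generally come from not sending what the other side already has. A generic sketch, not Oxen's protocol: compare a local manifest of path to content hash against the remote's, upload only new or changed files, and list remote‑only paths. The helper names and file layout are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def manifest(files: Dict[str, bytes]) -> Dict[str, str]:
    """Map each path to a SHA-256 of its content."""
    return {path: hashlib.sha256(data).hexdigest() for path, data in files.items()}


def plan_sync(local: Dict[str, str], remote: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (paths to upload, paths that exist only on the remote)."""
    upload = sorted(p for p, h in local.items() if remote.get(p) != h)
    remote_only = sorted(remote.keys() - local.keys())
    return upload, remote_only


local_files = {f"img/{i:07d}.jpg": f"pixels-{i}".encode() for i in range(1000)}
remote_manifest = manifest(local_files)        # remote already holds all 1,000 images
local_files["img/0000007.jpg"] = b"re-cropped"  # one image edited locally
local_files["img/0001000.jpg"] = b"new image"   # one image added locally

to_upload, remote_only = plan_sync(manifest(local_files), remote_manifest)
print(to_upload)  # ['img/0000007.jpg', 'img/0001000.jpg']
```

Only 2 of 1,001 files move over the network; at millions of files, exchanging compact manifests instead of rescanning content on both sides is where the wall‑clock savings come from.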

Problem: "We want a hosted, Git‑first place to tie datasets, runs, and models."

Solution: DagsHub versions data with DVC and connects experiments and models in one space. Reviewers specifically call out the Git‑like workflow and LLM dataset tracking. For underlying data lake versioning, pair with lakeFS for production reliability.

The bottom line on data versioning

Every quarter another team learns the hard way that object storage without version control is a liability. As AI and ML move deeper into production in 2026, the blast radius of bad data keeps growing. Training drift, silent corruption, and untraceable pipeline changes now show up as customer-facing incidents, not just internal cleanups.

For production data lakes, lakeFS remains the clear choice. It delivers zero-copy branching, atomic operations, and rollback semantics built for lake-scale reliability, not just experimentation. Its Git-style workflow makes data changes reviewable and reversible, which is exactly what teams need when AI systems depend on reproducibility and auditability.

If you are Git-first and working on smaller ML projects, DVC is still a solid option for experiment tracking. If collaboration and hosting matter more than lake-scale guarantees, DagsHub lowers the barrier to entry. If you are curating massive file-based datasets and need raw speed, Oxen.ai is worth a controlled proof of concept.

But if your data lake feeds production systems and revenue-critical models, start with lakeFS. In 2026, treating data like code is no longer an optimization; it is table stakes.