Webhose.io gives its consumers the ability to obtain real-time data in a variety of clean forms by crawling online sources located in different parts of the world.

Users of Webhose.io have the ability to simply index and search the structured data that is crawled. It is possible that it satisfies the users' basic crawling requirements. Users are able to create their own datasets by merely importing the data from a specific web page and then exporting it to a CSV file format.

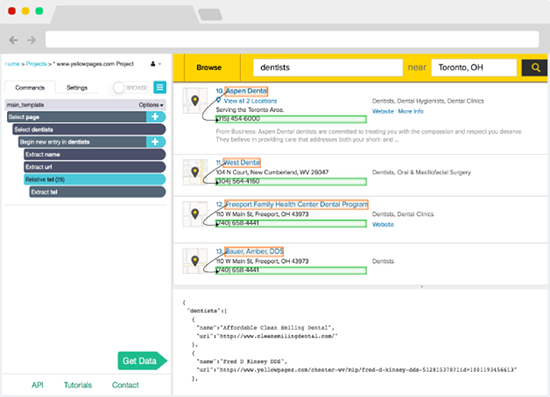

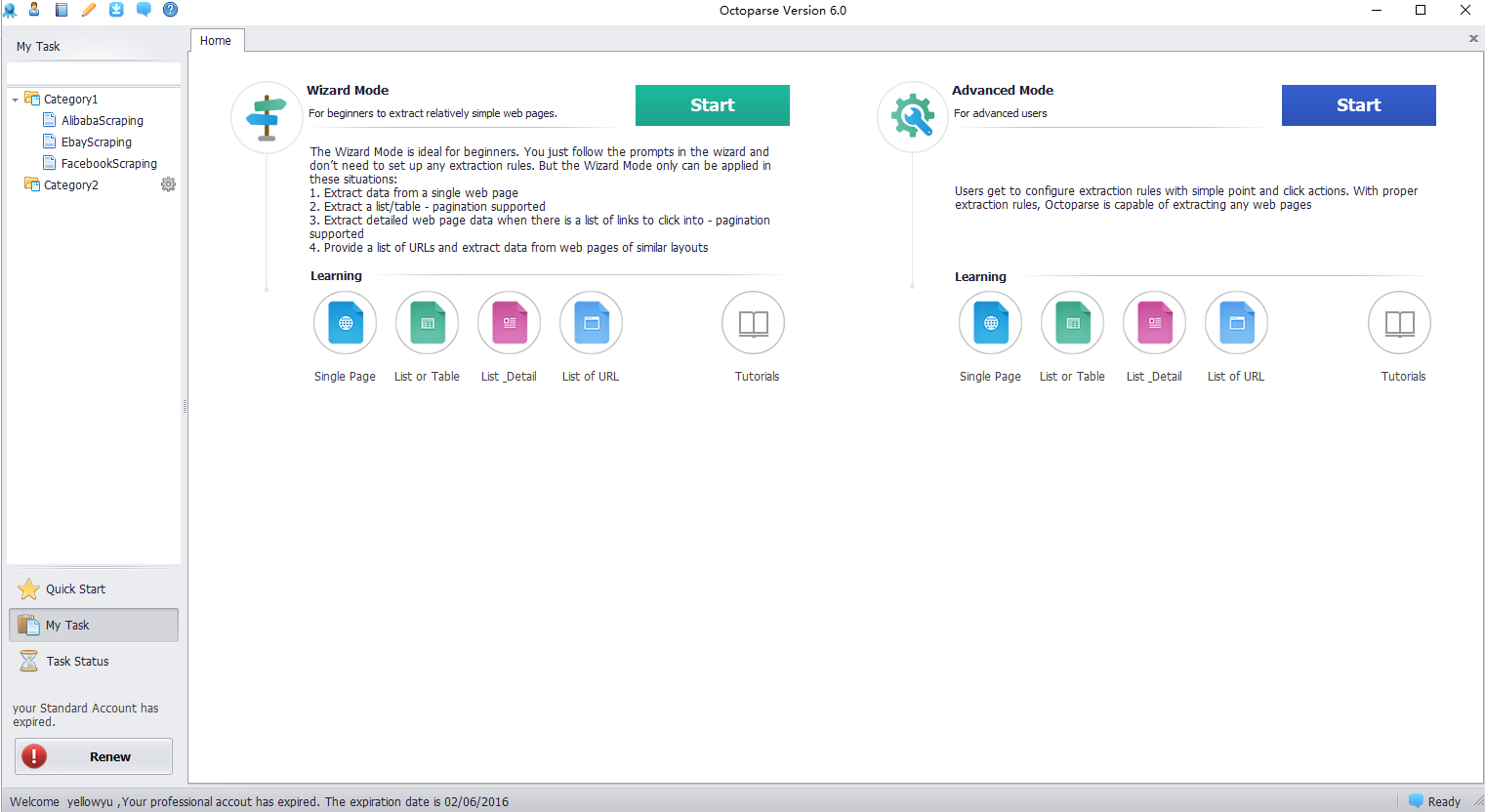

There are a bunch of decent tools out there that offer the same array of services as Webhose.io. And it can sure get confusing to choose the best from the lot. Luckily, we've got you covered with our curated lists of alternative tools to suit your unique work needs, complete with features and pricing.