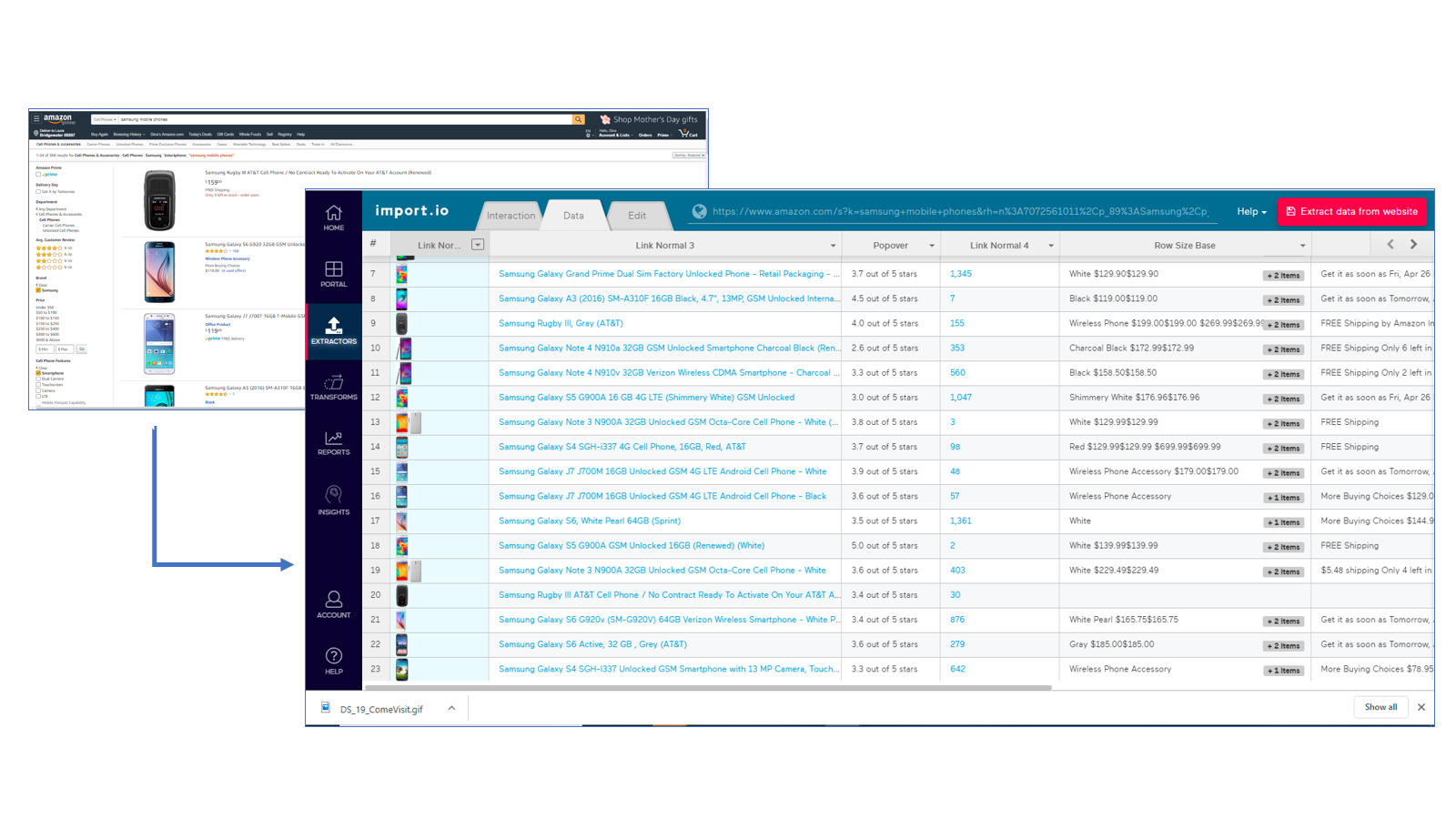

Zyte is a cloud-hosted data extraction tool that helps thousands of developers collect the web data they need.

It is designed to deliver web data quickly and reliably, so you can focus on extracting data instead of managing a pool of proxies. Its smart-browser and browser-rendering capabilities also help you get past anti-bot systems that target the browser layer.

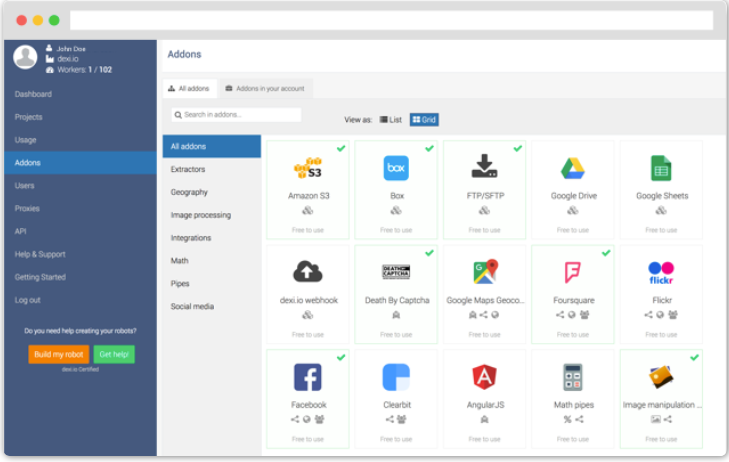

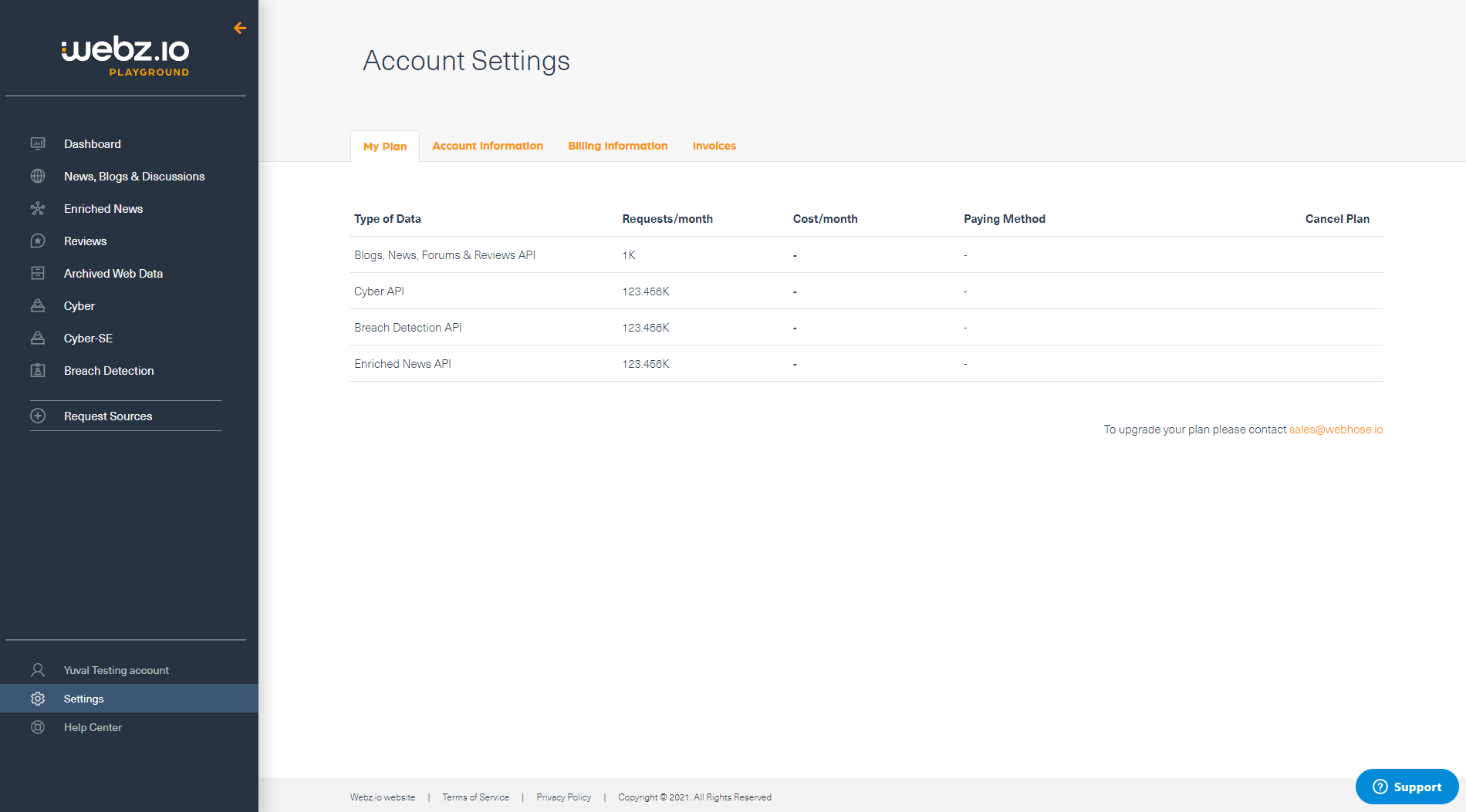

There are plenty of solid tools out there that offer services similar to Zyte's, and choosing the best one can get confusing. Luckily, we've got you covered with our curated list of alternative tools to suit your unique needs, complete with features and pricing.