Apache Spark is an open-source, distributed data processing framework that can quickly run processing tasks on very large data sets by spreading the work across a cluster of machines.

Spark Core is the general execution engine on which all other functionality of the Spark platform is built. It provides in-memory computing and can reference datasets stored in external storage systems.

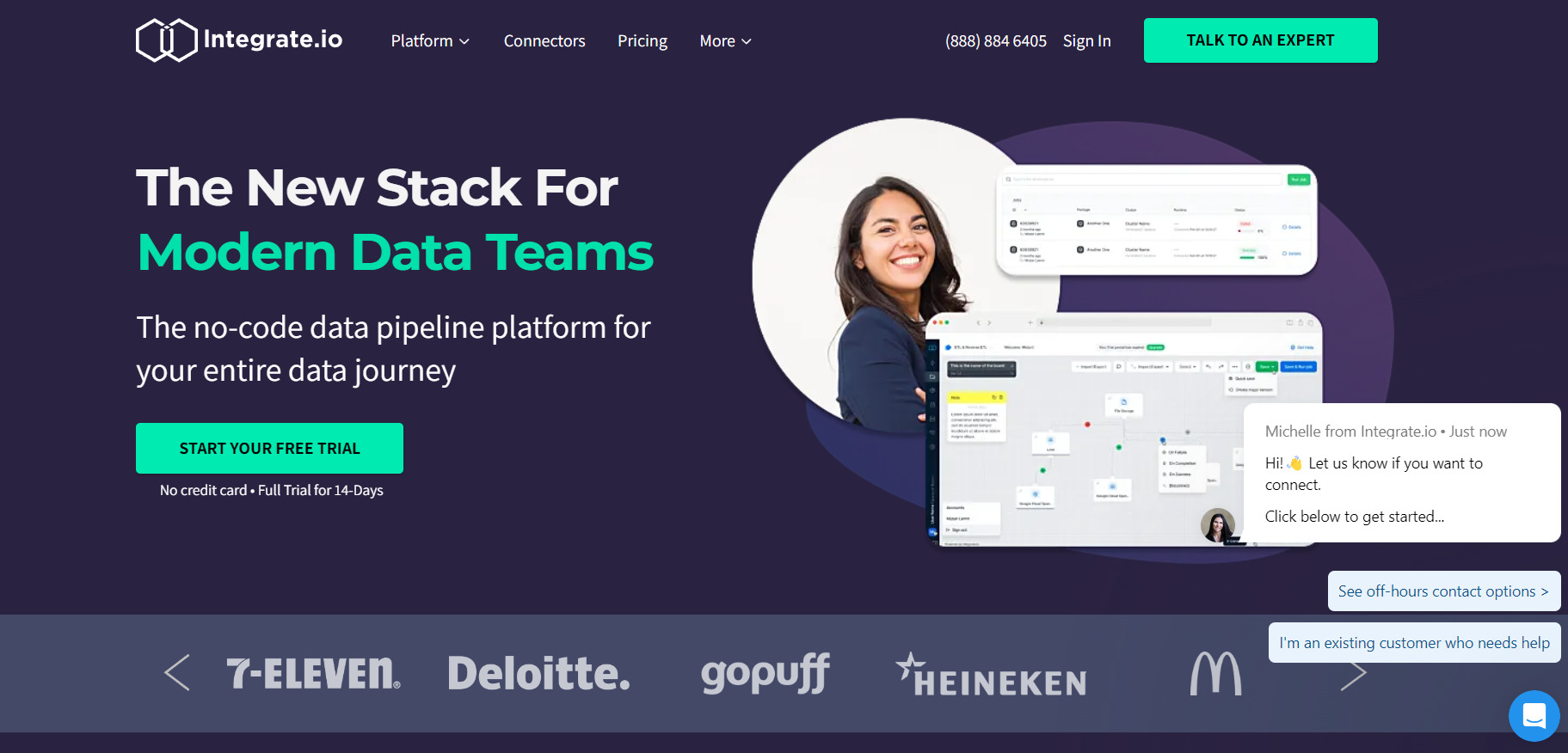

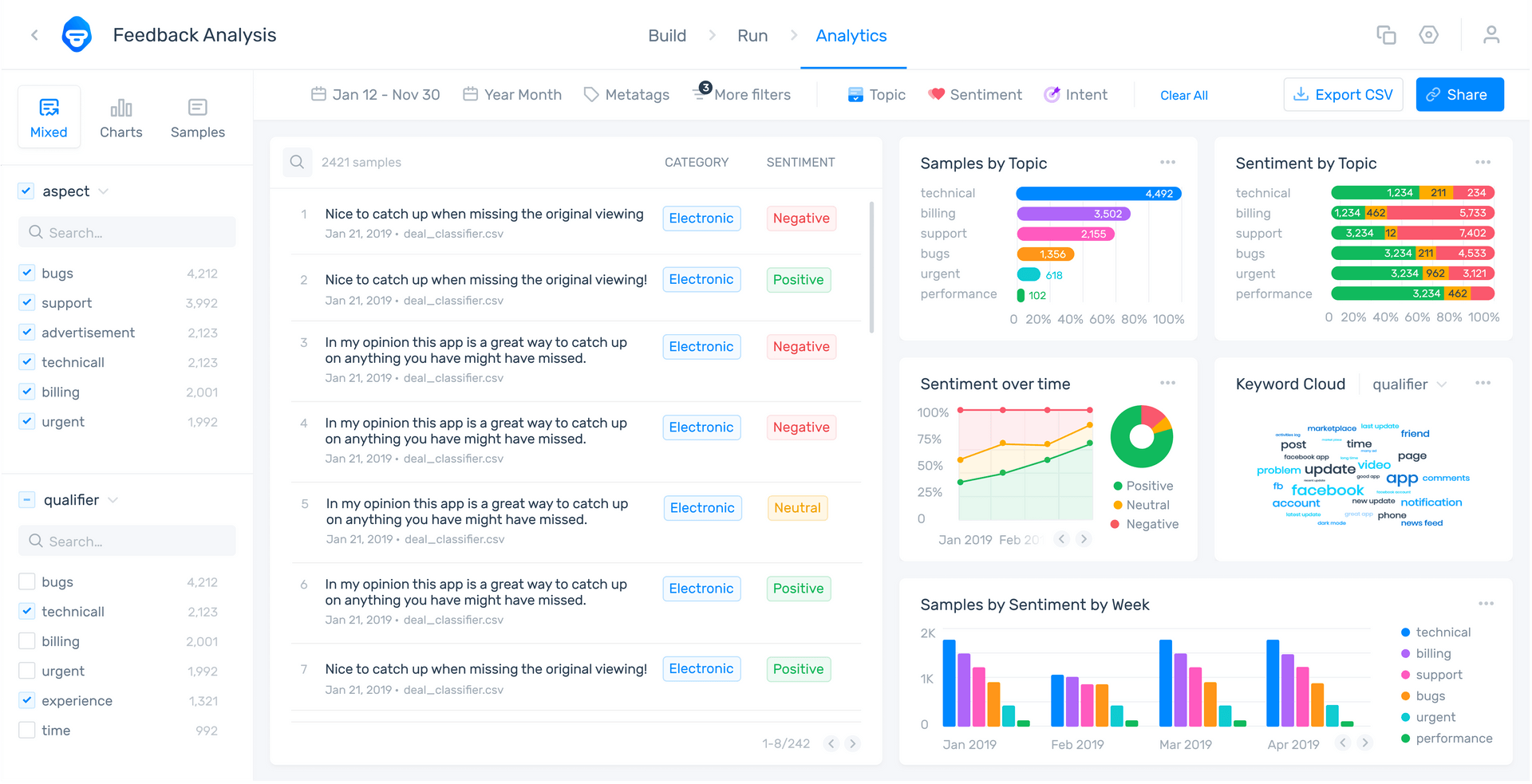

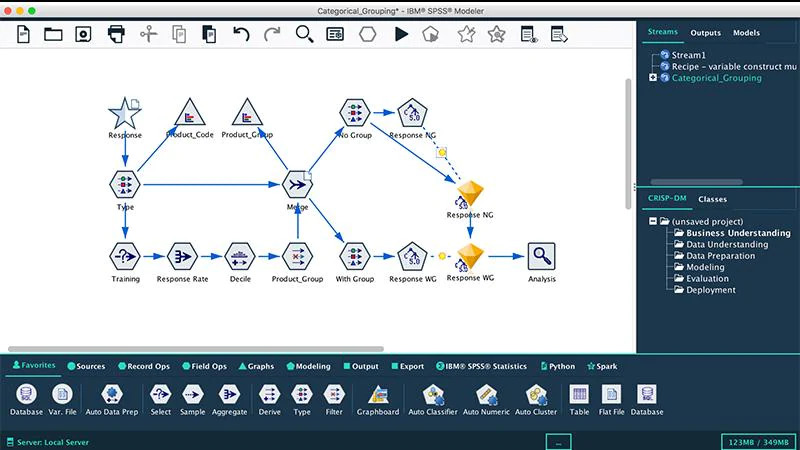

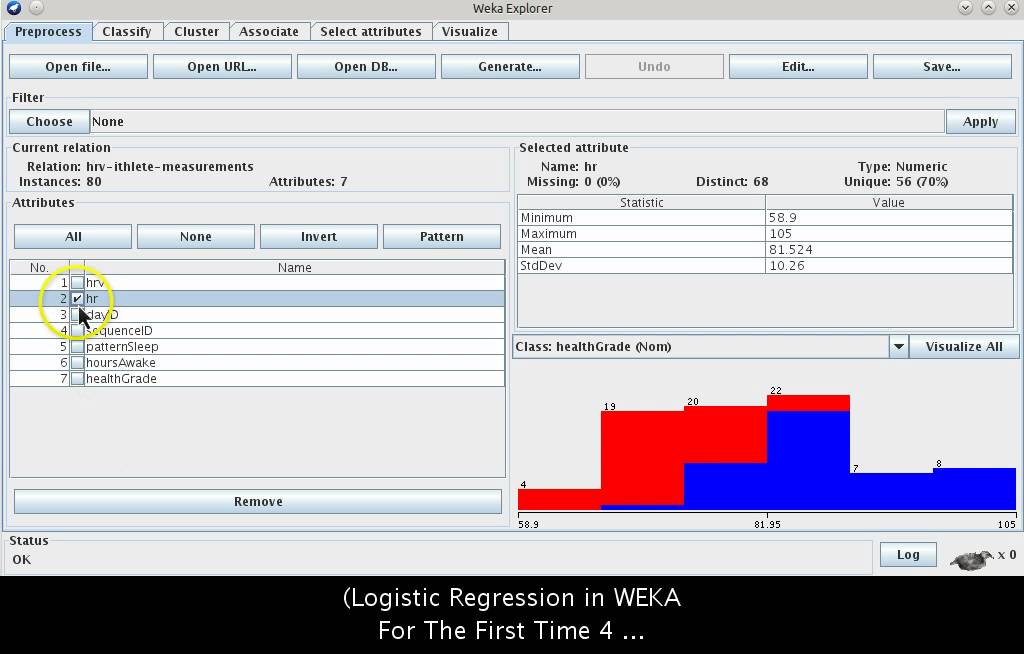

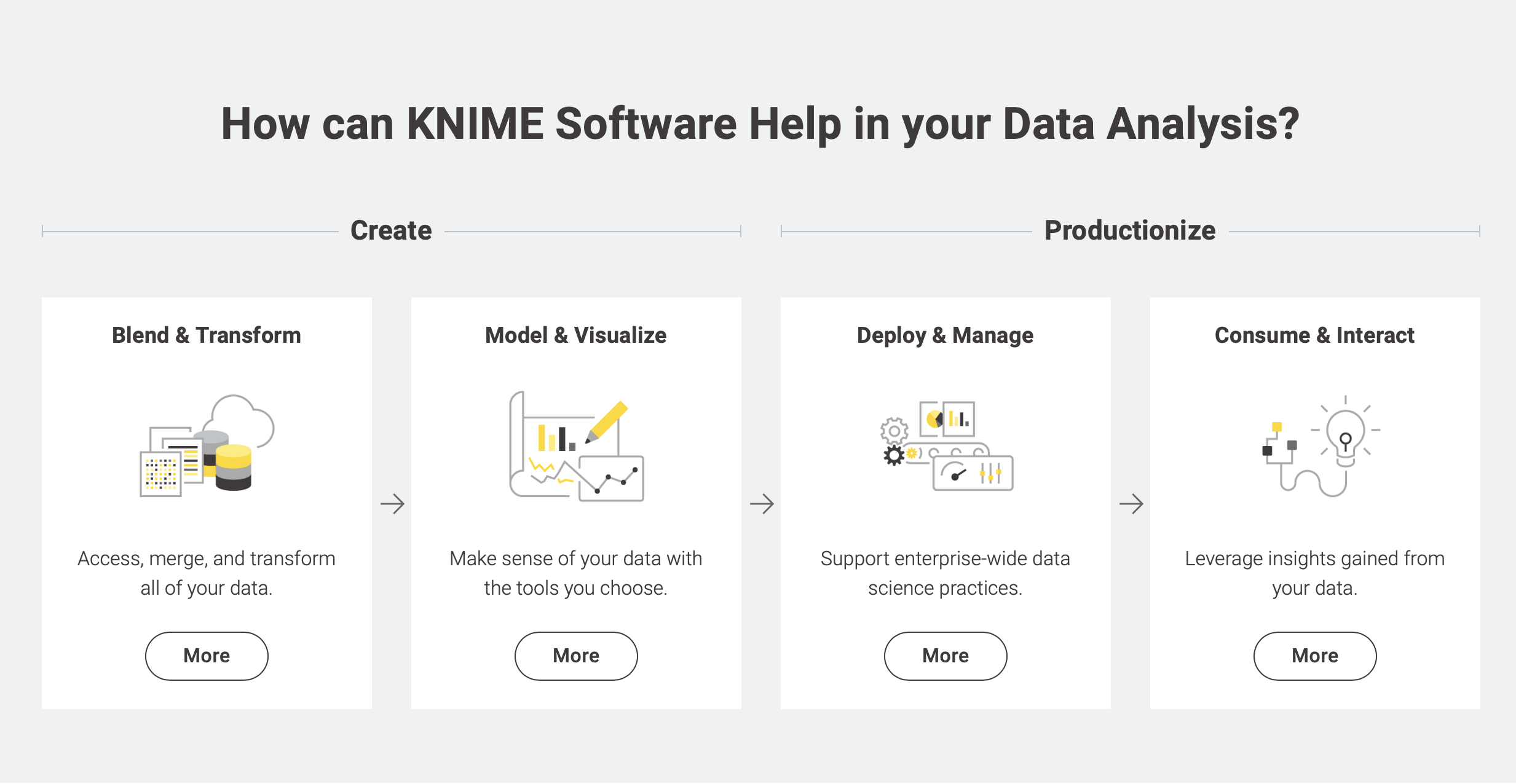

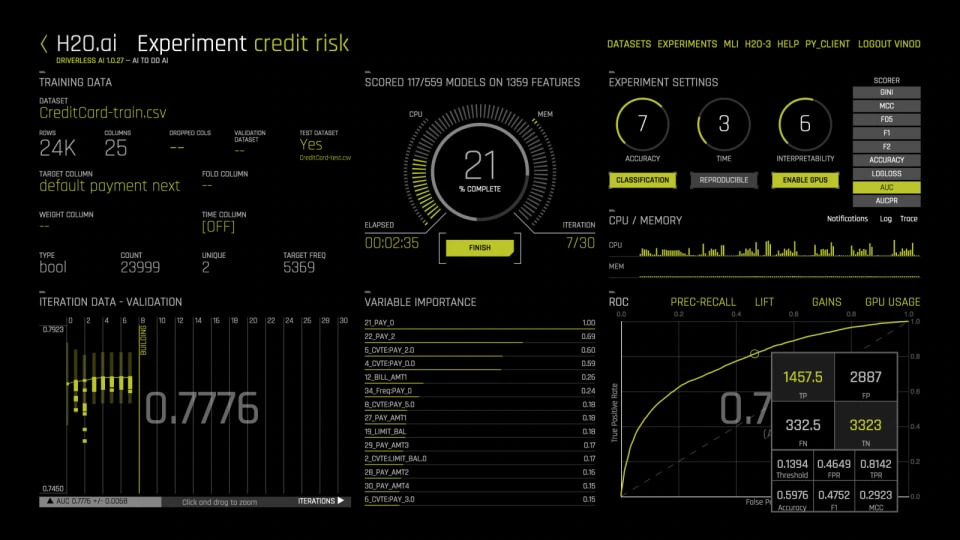

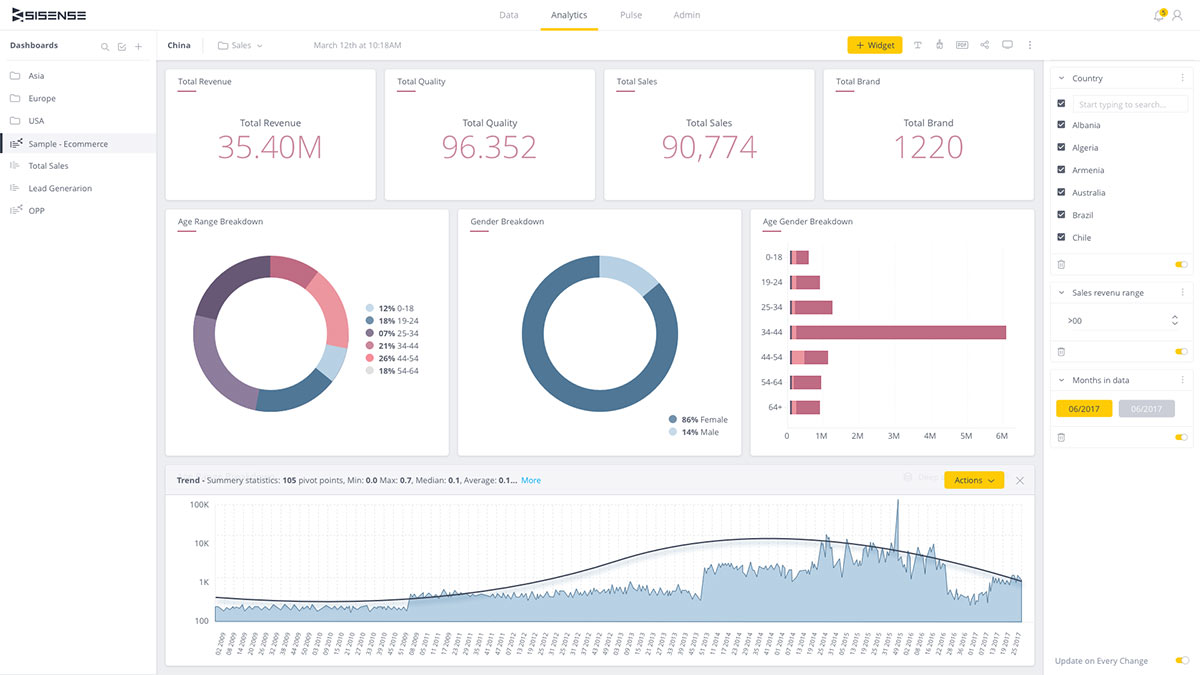
